Cross-environment testing is often viewed as a tedious, repetitive task, and it can be hard to fit into the fast iterations of an agile life cycle. I have worked as a tester on various agile teams, mostly in product-based companies, and I have seen first-hand the problems of cross-environment testing, but I have also seen the risks we face without it.

In one project, we were building a Windows desktop tool that worked with SAP and Microsoft Office and supported multiple languages. The tool was meant for complex technical scripts created by SAP experts, so functional testing alone was a complicated task. That, combined with verifying non-functional aspects like performance, run times, usability and learnability, left us little time to concentrate on interoperability testing.

But we did understand the need for testing in different environments. We had a vast user base and often received issues specific to certain environments, so we knew we needed a strategy to incorporate more cross-environment tests.

Here is how we simplified our cross-environment testing and fit it into our tight sprint schedule and release plan.


Testing Across OS Versions

We supported only Windows, but even that wasn't simple. Our users ran XP, Windows 7 and Windows 8, and some were even on Vista, so we had to support all these operating systems in both their 32- and 64-bit versions. After one particular user support issue, we also realized we needed to test on OS versions in different languages. So we listed each supported operating system version individually: Win 7 EN 64-bit, Win 10 FR 32-bit, and so on.

Our strategy was to designate two main OS versions as the baseline for development and testing. Our test team's systems were set up with this baseline, so most tests would be performed on these base versions.

The choice of baseline was based on analysis from the support and sales teams and was agreed upon by all stakeholders. The remaining OS versions went into a supplementary test environments list.
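To make the baseline/supplementary split concrete, here is a minimal sketch of how such an environment matrix could be modeled. The OS versions come from the article, but the language and bitness lists and the choice of the two baseline entries are hypothetical placeholders; in practice they would come from the support and sales analysis.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class OsEnv:
    version: str   # e.g. "Win 7"
    language: str  # OS UI language, e.g. "EN"
    bits: int      # 32 or 64

    def label(self) -> str:
        return f"{self.version} {self.language} {self.bits}-bit"


# Hypothetical values for illustration only.
VERSIONS = ["XP", "Vista", "Win 7", "Win 8"]
LANGUAGES = ["EN", "FR"]
BITNESS = [32, 64]

# Every OS version x language x bitness combination we claim to support.
ALL_ENVS = [OsEnv(v, l, b) for v, l, b in product(VERSIONS, LANGUAGES, BITNESS)]

# Assumed baseline: the two configurations the testers' own machines use.
BASELINE = {OsEnv("Win 7", "EN", 64), OsEnv("Win 8", "EN", 64)}


def split_matrix(envs: list[OsEnv], baseline: set[OsEnv]) -> tuple[list[OsEnv], list[OsEnv]]:
    """Return (baseline, supplementary) lists, preserving the input order."""
    base = [e for e in envs if e in baseline]
    supplementary = [e for e in envs if e not in baseline]
    return base, supplementary


if __name__ == "__main__":
    base, supp = split_matrix(ALL_ENVS, BASELINE)
    print("Baseline:", [e.label() for e in base])
    print("Supplementary:", len(supp), "environments")
```

The point of the split is that the small baseline gets exercised daily on the team's own machines, while the much longer supplementary list is scheduled deliberately.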

Supporting Systems Versions

Because our product used Microsoft Excel and Access, testing input from and output to different versions of these products was important. We often faced issues with writing to a certain Excel version or reading a certain “type” of data from Excel in different versions. But the question was: did the combination of Office version and OS matter?

Our research showed that, for the issues we had seen, it did not. So the logical thing to do was to cover the different Office versions (2003, 2007 and 2010), each paired with a corresponding OS. We matched them logically: XP would generally have Office 2003, and so on, with the Office bitness following the OS where a 64-bit Office edition existed. For non-English systems, we installed non-English versions of Office, too.

Another dependency was the installed SAP system version. We were mandated to support four different SAP release versions, so these were added to the environment dependency matrix as well. They, too, were made into logical combinations and listed in the test information.
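One way to build such "logical combinations" without testing the full cartesian product is each-choice coverage: every value of every dimension appears in at least one row. The sketch below treats the OS/Office pairings as one dimension and the SAP releases as another. The pairings and the SAP release names are hypothetical placeholders, and note that each-choice coverage is weaker than pairwise coverage; it does not guarantee every pair of values is tested together.

```python
from itertools import cycle, islice


def each_choice(*dimensions: list) -> list[tuple]:
    """Build rows so that every value of every dimension appears at least once.

    The number of rows equals the size of the largest dimension; shorter
    dimensions are cycled to fill the remaining rows.
    """
    if not dimensions or any(len(d) == 0 for d in dimensions):
        raise ValueError("every dimension needs at least one value")
    n = max(len(d) for d in dimensions)
    columns = [list(islice(cycle(d), n)) for d in dimensions]
    return list(zip(*columns))


# Hypothetical "logical" OS/Office pairings (e.g. XP generally ran Office 2003).
OS_OFFICE = [
    ("XP", "Office 2003"),
    ("Win 7", "Office 2007"),
    ("Win 8", "Office 2010"),
]
# Placeholder names for the four supported SAP releases (not real release names).
SAP_RELEASES = ["SAP-A", "SAP-B", "SAP-C", "SAP-D"]

if __name__ == "__main__":
    for (os_name, office), sap in each_choice(OS_OFFICE, SAP_RELEASES):
        print(f"{os_name} + {office} + {sap}")
```

Here four rows cover every pairing and every SAP release, compared with twelve rows for the full product.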

Localization

Our tool supported four different languages, and users could switch languages within the product. That switching needed testing, especially localized strings, errors and functionality in different scenarios, all of which had to be verified in every sprint. We also had to keep in mind that the number of supported languages would grow in upcoming releases, and plan a sustainable test strategy for coverage.
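Part of checking localized strings can be automated cheaply. The sketch below assumes, hypothetically, that each language's UI strings can be loaded as a key-to-text dictionary; it compares a localized table against the English reference and flags missing keys, stale extra keys, and strings identical to English (possibly untranslated, though some such as "OK" may be legitimate).

```python
def check_localization(reference: dict[str, str], localized: dict[str, str]) -> dict[str, list[str]]:
    """Compare a localized string table against the reference (English) table."""
    # Keys present in English but absent from the translation.
    missing = sorted(k for k in reference if k not in localized)
    # Keys left over in the translation that English no longer has.
    extra = sorted(k for k in localized if k not in reference)
    # Keys whose translated text is identical to English: suspects, not certain bugs.
    untranslated = sorted(k for k, v in reference.items() if localized.get(k) == v)
    return {"missing": missing, "extra": extra, "untranslated": untranslated}


if __name__ == "__main__":
    # Hypothetical string keys and texts for illustration.
    en = {"btn.run": "Run script", "err.conn": "Cannot connect to SAP", "btn.ok": "OK"}
    fr = {"btn.run": "Exécuter le script", "btn.ok": "OK", "err.old": "Ancienne erreur"}
    print(check_localization(en, fr))
```

A check like this catches structural gaps every sprint, leaving manual testing free to focus on meaning, truncation and layout.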

Planning and Strategy

All this cross-environment testing could make a simple user story very complicated. We needed to figure out a strategy so that within the normal three-week sprint, we could:

- Test the user stories at hand

- Regress the previous stories and features

- Ensure environment coverage

First, we defined a basic, minimal set of test cases that would serve as a sanity check of the entire product, and we got consensus on it from the development and business teams. This became our regression pack for the legacy functionality.

Next, we decided that for every new user story we developed and tested, we would pick a few basic tests from its test set and add them to the regression pack. Tests for any features that were changed or removed would also be updated in the regression pack. This was mandatory for every tester, and I took ownership of reviewing the updates every sprint so the regression pack would remain a living document rather than becoming obsolete.
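Keeping the pack "live" mostly means tracking which tests belong to which feature, so that a new story adds its tests and a removed feature takes its tests with it. A minimal sketch, with hypothetical test IDs and feature names; a changed feature would be handled by removing its old tests and adding the updated ones.

```python
from dataclasses import dataclass, field


@dataclass
class RegressionPack:
    # Maps test case ID -> feature it covers (names are illustrative).
    tests: dict[str, str] = field(default_factory=dict)

    def add_story_tests(self, feature: str, test_ids: list[str]) -> None:
        """Add the basic tests picked from a new user story's test set."""
        for test_id in test_ids:
            self.tests[test_id] = feature

    def remove_feature(self, feature: str) -> list[str]:
        """Drop all tests for a removed feature and return their IDs."""
        removed = [t for t, f in self.tests.items() if f == feature]
        for test_id in removed:
            del self.tests[test_id]
        return removed

    def for_feature(self, feature: str) -> list[str]:
        return sorted(t for t, f in self.tests.items() if f == feature)


if __name__ == "__main__":
    pack = RegressionPack()
    pack.add_story_tests("export-to-excel", ["TC-101", "TC-102"])
    pack.add_story_tests("sap-login", ["TC-201"])
    print(pack.remove_feature("export-to-excel"), pack.tests)
```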

We then asked the IT team to create relevant environments based on the list of combinations we had to support and test in our environment matrix.

Meanwhile, we negotiated with the product owner how often tests would be performed on each test environment. Since most testing would happen on the two base test environments, these became the actual configuration of our own systems: with four testers on the team, two systems were set up for each base environment. The rest of the environments in our matrix were the supplementary test environments.

After discussions with the stakeholders, we agreed to cover every supplementary test environment at least once within the release. So if we had five sprints to plan, we were free to divide the supplementary test environments among those five sprints and run at least the regression pack on each. We could also perform more tests whenever we wanted to.
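Dividing the supplementary environments across the release can be as simple as a round-robin assignment, which guarantees each environment lands in exactly one sprint and keeps the per-sprint load even. The environment names below are placeholders.

```python
def plan_supplementary(envs: list[str], sprints: int) -> dict[int, list[str]]:
    """Assign each supplementary environment to one sprint, round-robin.

    Every environment is scheduled exactly once in the release, and sprint
    loads differ by at most one environment.
    """
    if sprints < 1:
        raise ValueError("need at least one sprint")
    plan: dict[int, list[str]] = {s: [] for s in range(1, sprints + 1)}
    for i, env in enumerate(envs):
        plan[i % sprints + 1].append(env)
    return plan


if __name__ == "__main__":
    envs = [f"ENV-{n}" for n in range(1, 8)]  # seven hypothetical environments
    for sprint, assigned in plan_supplementary(envs, 5).items():
        print(f"Sprint {sprint}: {assigned}")
```

A real plan would likely also weigh environment priority (for example, by user base), but round-robin is a reasonable starting point.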

Lastly, there was localization coverage. The product owner did not want us to spend an entire sprint testing the whole application in a new language, because that would be a huge effort and would take time away from user stories of higher value. Instead, we decided to divide the languages among ourselves for the entire release.

The four testers split ownership of French, German, Spanish and Portuguese. Within each sprint, whatever user story or regression we were working on, each of us would test the application in our assigned language for at least one day. It did slow me down, because I had to switch back and forth between English and the other language to understand some strings and errors. But it ensured basic sanity, covered different areas of the product as well as both new and old features, and turned up localization issues as a by-product of other testing rather than through a dedicated effort.

By the end of the release, we had spent a good amount of time testing the application in a different language (and even learned some basics of that language!) without spending dedicated time on localization testing.

After a couple of sprints, we realized that even running the basic regression pack on all supplementary test environments would eventually become a big task. So, in parallel, we started automating our regression pack using a basic functional automation tool. After that, these tests ran automatically on the supplementary test environments every sprint.
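The article does not name the automation tool, so the sketch below assumes a hypothetical `run_test(env, test_id)` hook that returns True on pass. The driver loops the regression pack over every supplementary environment and treats an exception (for example, an unreachable VM) as a failure instead of letting it abort the whole batch.

```python
from typing import Callable

# Hypothetical hook into whatever automation tool is in use.
RunTest = Callable[[str, str], bool]


def run_pack_everywhere(envs: list[str], test_ids: list[str],
                        run_test: RunTest) -> dict[str, dict[str, bool]]:
    """Run every regression test on every environment; return pass/fail results."""
    results: dict[str, dict[str, bool]] = {}
    for env in envs:
        results[env] = {}
        for test_id in test_ids:
            try:
                passed = bool(run_test(env, test_id))
            except Exception:
                # A crashed run or unreachable environment counts as a failure.
                passed = False
            results[env][test_id] = passed
    return results


def failures(results: dict[str, dict[str, bool]]) -> list[tuple[str, str]]:
    """List (environment, test) pairs that did not pass, sorted for reporting."""
    return sorted((env, t) for env, per_test in results.items()
                  for t, ok in per_test.items() if not ok)
```

Usage, with a fake runner standing in for the real tool: `failures(run_pack_everywhere(["Win 8 FR 32-bit"], ["TC-1", "TC-2"], fake_runner))` returns only the pairs that failed, which is what a tester needs to look at after an unattended run.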

At the end of the last sprint, we would typically have a user acceptance test cycle before release, and we used this time to review our environment coverage. If needed, we could run additional or pending tests on the supplementary test environments. This gave us buffer time to gain confidence in our supported test environments before release.


Additional Ownership

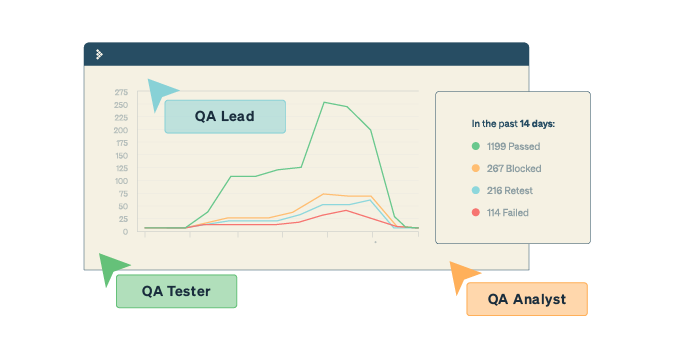

We realized that managing the sequence and prioritization of our environment matrix took additional effort, so we designated one owner to track test environment coverage in each sprint. The owner was responsible for publishing the coverage of the test environment matrix at the end of the release. There was also a proposal to rotate this responsibility in upcoming releases.
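The coverage the owner publishes can be reduced to two numbers per release: the share of the matrix touched so far and the environments still pending, which is exactly the list worth revisiting during the UAT cycle. A minimal sketch, assuming the owner records how many regression tests were run per environment (the counts below are made up).

```python
def coverage_report(matrix: list[str], executed: dict[str, int]) -> tuple[float, list[str]]:
    """Return (percent of environments covered, environments still pending).

    An environment counts as covered once at least one regression test
    has been run on it during the release.
    """
    if not matrix:
        return 100.0, []
    pending = [env for env in matrix if executed.get(env, 0) == 0]
    covered = len(matrix) - len(pending)
    return 100.0 * covered / len(matrix), pending


if __name__ == "__main__":
    matrix = ["Win 7 EN 64-bit", "Win 8 FR 32-bit", "XP DE 32-bit", "Vista EN 32-bit"]
    executed = {"Win 7 EN 64-bit": 40, "Win 8 FR 32-bit": 12, "XP DE 32-bit": 12}
    pct, pending = coverage_report(matrix, executed)
    print(f"Coverage: {pct:.0f}%, pending: {pending}")
```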

Over time, we also realized that the effort of keeping the test environment servers and virtual machines up and running needed to be shared, too; we could not rely on the IT team forever. Once the environments were ready, we had to ensure they were reachable and cleaned up after every use, so we assigned each tester a couple of test environments to maintain.

Each owner kept track of their systems and updated them to the latest software versions at the end of every sprint, which also gave the team a single point of contact whenever we needed to test or regress something in that environment. Today, container tools such as Docker can make this kind of environment upkeep easier to manage.
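As a small illustration of the "clean after every use" idea with Docker, here is a sketch in Python around the Docker CLI. The image name is a hypothetical placeholder, and this assumes the environment can run in a container at all; a desktop tool that needs a full Office and SAP GUI install may still require virtual machines. The `--rm` flag tells Docker to remove the container when it exits, so each run starts from the unmodified image.

```python
import subprocess


def docker_run_command(image: str, test_cmd: list[str]) -> list[str]:
    """Build a `docker run` invocation that discards the container afterwards."""
    # --rm removes the container on exit, so no state leaks into the next run.
    return ["docker", "run", "--rm", image, *test_cmd]


def run_in_fresh_container(image: str, test_cmd: list[str]) -> int:
    """Run the test command in a throwaway container; return its exit code."""
    return subprocess.run(docker_run_command(image, test_cmd)).returncode


if __name__ == "__main__":
    # Hypothetical image and test command, shown without executing Docker.
    print(docker_run_command("example/regression-env:fr", ["python", "-m", "pytest"]))
```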

Make Cross-Environment Testing Work for You

We can make cross-environment testing part of our regular agile testing without waiting for the UAT cycle or the end of release. Beginning with small steps and simple discussions, we can create a sustainable and scalable plan for ourselves, bringing in automation and other tools to support our strategy.

Nishi is a consulting Testing and Agile trainer with hands-on experience in all stages of the software testing life cycle since 2008. She works with Agile Testing Alliance (ATA) to conduct courses and trainings and to organise testing community events and meetups, and she has been a speaker at numerous testing events and conferences. Check out her blog, where she writes about the latest topics in the Agile and Testing domains.
