I have been working in an automation-heavy context for the past six months. A group of two developers and a test specialist works together on nearly every change, and we do a lot of test-driving: writing a little bit of test code and then the production code that makes that test pass. By the time we are ready to commit a change to GitHub and cut a pull request, we are also ready to go to production.

The whole process tends to move quickly, and if we don’t pay attention, it’s easy to lose track of which tests run at the unit level or the service layer, which tests run in the browser, and what was explored.

Here are three tips to help you make sense of test coverage in an automation-heavy environment.


1. Discover Coverage Classes

For any change I work on, we might write five or six different types of tests. The product I work on has a tool for uploading files, sometimes from a third-party software product, that assigns each file a label to help categorize it. The labels are defined in a part of the product that is hidden from everyone except people with an administrator role.

We were working on a change that would allow a person to edit those file categorization labels. From a user’s perspective, the change is fairly simple: select a type, edit it, save, and see the name change propagate through our software. From a testing perspective, there are views, controllers, models and the user interface to cover, plus plenty to explore in the browser.

How do we start, and how do we know we’re done?

We rely on a combination of static analysis and rules of thumb to decide what to test and to describe that process to other team members. The software changes I see tend to deal either with data manipulation (CRUD transactions) or with state changes.

In the example above, we want to test that a value can be set and updated, and we want to see how the system fails when the value is somehow set to NULL. Those tests address the code at the unit level. At the view, or user interface, level, we want to know that fields are editable, that they can be updated and that the updates are reflected in the model. If we test-drive the change, we know we are done when all the code is implemented and the tests are passing.
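Here is a minimal test-first sketch of those unit-level checks in Python. The `FileLabel` class, its `rename` method and the decision to reject `None` with a `ValueError` are hypothetical stand-ins, not the product’s real code. In a test-driven flow, the test cases would come first and the class would grow to make them pass.

```python
import unittest
from typing import Optional


class FileLabel:
    """Hypothetical model for a file categorization label (illustration only)."""

    def __init__(self, name: str) -> None:
        self.name = name  # routed through the validating setter below

    @property
    def name(self) -> str:
        return self._name

    @name.setter
    def name(self, value: Optional[str]) -> None:
        # Assumption: a missing (NULL/None) or blank name is rejected loudly
        # instead of being stored silently.
        if value is None or not value.strip():
            raise ValueError("label name must be a non-empty string")
        self._name = value

    def rename(self, new_name: Optional[str]) -> None:
        self.name = new_name


class FileLabelTest(unittest.TestCase):
    def test_name_can_be_set(self):
        self.assertEqual(FileLabel("Invoices").name, "Invoices")

    def test_name_can_be_updated(self):
        label = FileLabel("Invoices")
        label.rename("Receipts")
        self.assertEqual(label.name, "Receipts")

    def test_null_name_is_rejected(self):
        label = FileLabel("Invoices")
        with self.assertRaises(ValueError):
            label.rename(None)
        # A failed update leaves the previous value in place.
        self.assertEqual(label.name, "Invoices")
```

Passing the file to `python -m unittest` runs the three tests. Which failure to expect for NULL (an exception, a validation message, a database constraint) is exactly the kind of question the group settles while test-driving.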

On top of that, a code analysis tool runs on our continuous integration server and reports coverage by type, such as decision, variable, line and method coverage. These reports aren’t great at telling us whether we have tested the right things or whether our tests are good, but they do a good job of pointing a big, flashing arrow at the parts of our code base that lack test coverage.
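To see why the coverage types differ, consider the hypothetical helper below. A single test can execute every line of it (full line coverage) while exercising only one outcome of the `if`, which leaves decision coverage incomplete. With coverage.py, for example, branch measurement is enabled with `coverage run --branch`; the function itself is only an illustration.

```python
from typing import Optional


def display_label(name: Optional[str]) -> str:
    """Hypothetical helper: show a label, falling back when it is missing."""
    label = "Uncategorized"
    if name:
        label = name
    return label


# This one check executes every line above (full line coverage)...
assert display_label("Invoices") == "Invoices"

# ...but only the "true" outcome of `if name:`. Decision coverage also
# needs a case where the condition is false:
assert display_label(None) == "Uncategorized"
```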

2. Have a Regression Strategy

One release pattern I see in automation-heavy contexts is releasing when we see a green bar in continuous integration. Every code change we make is accompanied by tests against the code and some programmatic tests that run in the browser. A build might run and report on each of these test types. Once I see that all the test reports are coming back green, meaning that no tests are failing, we are, in theory, ready to send that build to production.
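The green-bar rule amounts to a simple release gate. The sketch below assumes a hypothetical `SuiteReport` record, suite names and test counts; a real pipeline would read these values from its own CI reports.

```python
from dataclasses import dataclass


@dataclass
class SuiteReport:
    """Hypothetical summary of one test report from a CI build."""
    name: str
    passed: int
    failed: int


def is_green(reports: list[SuiteReport], required: set[str]) -> bool:
    """True only if every required suite reported and no reported suite failed."""
    reported = {r.name for r in reports}
    if not required <= reported:
        return False  # a missing report is not a green report
    return all(r.failed == 0 for r in reports)


build = [  # hypothetical counts
    SuiteReport("unit", passed=412, failed=0),
    SuiteReport("service", passed=88, failed=0),
    SuiteReport("browser", passed=35, failed=0),
]
print(is_green(build, {"unit", "service", "browser"}))  # True
```

Treating a missing report as not green is a deliberate choice: a suite that silently failed to run should block a release just as a failing test would. The gate still says nothing about how separately tested changes interact once merged.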

In my experience, this works when only a handful of changes are being merged into the code base at a time. Every time we merge a new change, we introduce more risk (the possibility that something might go wrong in use) into the code base, even when that change is well tested on its own. I like to use inventories to manage this risk.

Let’s return to the file categorization tool I mentioned above. We tested this change thoroughly, in my opinion, and when we merged it into the main development branch, everything looked good. However, a few days passed between that merge and our push to production, and other changes were merged in the meantime. Some of them touched areas surrounding what we had worked on, so there were open questions about how everything would fit together.

I sat down with another test specialist who was familiar with the product, and we talked through the areas these changes might affect. We made a list of the places where our updated label text was expected to propagate through the system after a change, the different ways a change might come into the system, and the different file types it might happen on.

With this list in hand, once we had a final merge branch, we were able to do a targeted regression pass that took about 15 minutes. The testing went quickly because we knew which tasks we needed to perform and where to find the functionality in our product.
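One way to turn that kind of list into a regression checklist is to cross its three dimensions: where the label shows up, how a change arrives, and which file type is involved. The values below are placeholders rather than the product’s real ones, and the `regression_checklist` helper is hypothetical; the inventory described above was a conversation and a list, not necessarily code.

```python
from itertools import product

# Hypothetical inventory dimensions (placeholders, not the real product's).
PROPAGATION_POINTS = ["file list", "file detail page", "search filters"]
ENTRY_POINTS = ["admin label editor", "third-party import"]
FILE_TYPES = ["pdf", "docx", "csv"]


def regression_checklist(points, entries, file_types):
    """Return one check per combination of where, how and what."""
    return [
        f"Change label via {entry}; verify it on {point} for a {ftype} file"
        for point, entry, ftype in product(points, entries, file_types)
    ]


checklist = regression_checklist(PROPAGATION_POINTS, ENTRY_POINTS, FILE_TYPES)
print(len(checklist))  # 18
```

A full cross product grows quickly, so in practice you would prune combinations that share a code path. That pruning is the judgment the conversation between testers supplies.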

The point here isn’t to maintain a constant running inventory of code changes and how they might ripple through a piece of software, but to be aware of risk and missing coverage. The testing we do during development can lose its meaning as more and more changes are added to the code base.

Remembering what was covered and how that ties into other changes that are happening in tandem leads me to my last point on test selection.

Join 34,000 subscribers and receive carefully researched and popular article on software testing and QA. Top resources on becoming a better tester, learning new tools and building a team.

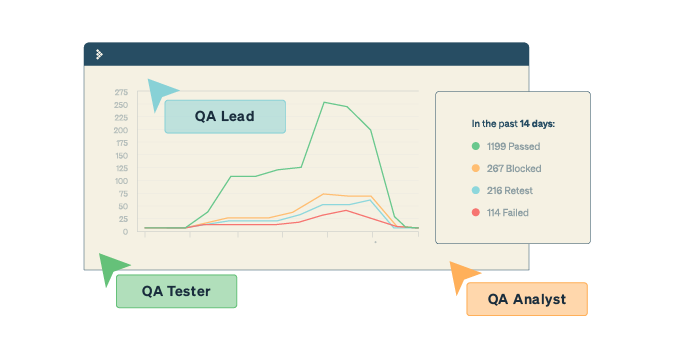

3. Be Aware of Test Selection

I spend most of my workday in a group with developers. Generally, the developers write product code and the test specialists either write or assist with the test code. One person writes code, also known as driving, and the others navigate by making suggestions or pointing out code errors when they see them.

We change drivers at different points while making a code change. Some people write a test, then the code to satisfy that test, and then switch drivers; others drive for hours at a time and switch only when they get tired. We often change pairs during the development of a code change as well. It is not uncommon for everyone except one person, the anchor, to move on to another code change before the current one is ready to ship. Pair switching has benefits, but we lose a little context each time. I like to mitigate this by holding regular debriefs on test coverage.

Before we start working each morning, I like to talk briefly about what happened yesterday: what we built, how we tested it, and what tasks we hope to complete today. I like to walk through the tests in particular. This test debrief helps us understand the coverage we have and discover parts of the code base we neglected to test the day before, and it prepares the current anchor to explain the changes we have made and the testing we have performed if the pair switches.

By doing this daily debrief and focusing on test coverage, we regularly discover implementation problems, necessary tests we didn’t think to write, and parts of the implementation we initially forgot.

Counting on Coverage

Asking how much testing has been performed and whether we are nearly done is completely reasonable. But the answer is nuanced, and difficult to give if we aren’t paying attention.

My strategy is to keep a running tab on the testing we do. For each change, talk about the testing you perform at each layer (service; unit; model, view or controller; and the user interface) and be able to discuss those tests coherently. Supplement that conversation with the coverage data from the analysis tool that runs in continuous integration. Then talk about the exploration you perform in terms of an inventory of scenarios, variables, configurations and so on.
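A running tab can be as small as one record per change that lists tests by layer alongside the exploration inventory, so empty layers stand out before the next debrief. The layer names follow the list above; `CoverageTab` and its methods are hypothetical, a sketch of one way to keep the tab rather than a prescribed tool.

```python
from dataclasses import dataclass, field

LAYERS = ("service", "unit", "model/view/controller", "user interface")


@dataclass
class CoverageTab:
    """Hypothetical running tab of testing for one change."""
    change: str
    tests: dict[str, list[str]] = field(default_factory=dict)
    explored: list[str] = field(default_factory=list)

    def add_test(self, layer: str, description: str) -> None:
        if layer not in LAYERS:
            raise ValueError(f"unknown layer: {layer}")
        self.tests.setdefault(layer, []).append(description)

    def untested_layers(self) -> list[str]:
        """Layers with no recorded tests, in LAYERS order."""
        return [layer for layer in LAYERS if not self.tests.get(layer)]


tab = CoverageTab("edit file categorization labels")
tab.add_test("unit", "label name can be set, updated, and rejects None")
tab.add_test("user interface", "label field is editable and saves")
tab.explored.append("renamed label shows on the file list for pdf uploads")
print(tab.untested_layers())  # ['service', 'model/view/controller']
```

An empty layer is not automatically a gap; it is a prompt to say out loud why that layer needs no tests for this change.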

Being able to speak to each piece of coverage gives a more complete understanding of what is tested, what isn’t and the areas of your product that might be a mystery.

This is a guest posting by Justin Rohrman. Justin has been a professional software tester in various capacities since 2005. In his current role, Justin is a consulting software tester and writer working with Excelon Development. Outside of work, he is currently serving as President of the Association for Software Testing Board of Directors, helping to facilitate and develop various projects.
