The promise of the agile approach is that we deliver something often. We deliver so we can obtain feedback and discuss what remains with our customer (or Product Owner). We also deliver so we can assess our process and teamwork along the way. (See Feedback & Feedforward for Continuous Improvement for more about this reflection.)

Too often, people think about a feature set as the sum total of everything that could be in it. However, instead of delivering "all" of it, we can deliver one of several minimums.

Define the Various Minimums

We have many possible “minimums” when we think about stories and roadmaps:

- MVE, Minimum Viable Experiment: A story that provides feedback for the team and the Product Owner.

- MVP, Minimum Viable Product: A story or set of stories with enough functionality for the customer to use, even if it's not the full functionality.

- MMF, Minimum Marketable Feature: The smallest piece of functionality that has value to the customer.

MVEs can be useful when you want to know whether you should even think about a given part of a feature set. One team at the Acme company struggled with the feature set called "Reports." The feature set contained several large report features: sales by geography, sales by kind of product, sales aggregated across several customers, and more.

The team knew they needed some sort of database, some sort of configurable output, and some sort of security/privacy, because customers could see some of these reports and each customer's data had to remain private. No customer could see any other customer's data. They knew they needed at least two levels of privacy: one for customers and one for people inside the organization.

When they tried story mapping the Reports feature set, they created more than 20 stories just for customer-based reports. They decided they needed an MVE to discover the minimum reporting needs for the customer reports. Maybe they were overanalyzing the customer-based reports feature set.

Because this product required security, both the MVP and the MMF required a database with security features. The team knew better than to start with a database. They needed information before they could possibly create a schema.

They decided on an experiment with fake data to see what the customers really needed. Then they could prune the story backlog.

Experiments Create a Common Agreement on What’s Important

When the team created their original story map for customer reports, they had three levels of security: each customer's inside salesperson, the customer's sales manager (or other senior management), and Acme's product management and salespeople. Acme needed information about all of its customers, while each customer needed its data kept private from the other customers. That is why Acme's people needed a separate level of security.

The initial experiment was simple: Given a small set of products, how did the customers need to visualize the data?

The team considered mocking up reports in a flat file. A tester suggested they create paper prototypes instead, which would be easy to change on the fly if necessary.

The team (including the Product Owner) spent an hour creating a variety of paper prototypes and the flows they expected the customer would like. They called the product manager who had visited a customer the week before and walked him through the flows, one at a time.

The product manager accepted the first flow and rejected the next three, explaining why. His explanations surprised both the Product Owner and the rest of the team. They learned a lot from that experiment and were able to postpone a number of stories from the Reports feature set, all in one day of work.

Now, they needed to consider the schema. They knew they wouldn’t have even an MVP without the necessary security. That meant they had to build in not just security but performance.

Spikes Help the Entire Team Learn Together

This team had tried one-day spikes in the past and liked them. They had a typical flow:

- The entire team met for up to 20 minutes in the morning (at 9am) to review the story. The team often needed only five minutes, but they allocated 20 minutes.

- The team decided how to work together. In this case, two developers paired on the schema, two testers paired on the automated tests, and the third developer created hooks so the testers could access the database. They decided to develop and test everything below the GUI.

- The team separated into their subgroups and worked for an hour.

- Every hour, they met again for five minutes, as a quick check-in. Was anyone stuck? Were they proceeding as they expected? Did anyone need help? Was anyone done and could help the others in some way?

Their check-ins weren't traditional standups, where, too often, the team focuses on each person. These check-ins allowed the team members to focus on the work.

At each check-in, the team decided whether they needed to reconstitute their subteams to change the flow of the work. They did this every hour (with a lunch break) until 4pm or until they were done, whichever came first.

The team stopped at 4pm for several reasons:

- They were tired. They’d focused all day on challenging work.

- If they couldn’t “finish” the spike that day, the work was probably larger than even two days. They needed to regroup and replan.

- If they were done, they could explain what they discovered to the PO.

After the first day, they realized they needed other scenarios for performance and security. It was time to discuss with the PO how performance and security worked together and separately. What could they postpone for performance, especially since they weren't going to scale the reports yet?

Join 34,000 subscribers and receive carefully researched and popular article on software testing and QA. Top resources on becoming a better tester, learning new tools and building a team.

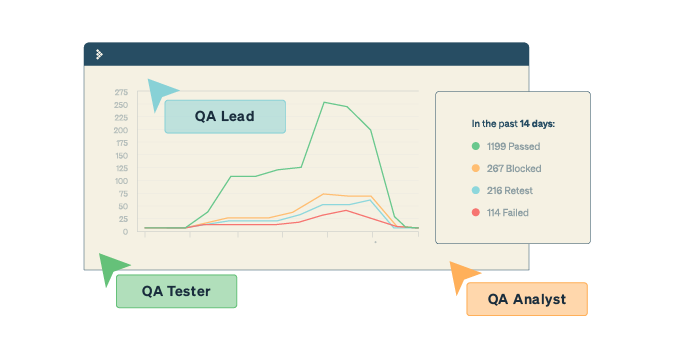

MVPs Provide the Team Feedback

The PO led the team's discussion about what constituted a real MVP. An MVP meant the customers had to be able to use what the team released. They selected five stories and created this first MVP only for the inside salesperson. The team expected to receive feedback from the inside salespeople about the functionality and performance. They would use that feedback for the next few MVPs, for Acme's salespeople.

In this case, the PO had to decide which persona mattered most at each point, so the MVPs were sequenced in a way that made sense. This was a balancing act between feedback for the team and releasing value for the customers.

It took the team several MVPs to create one MMF, a Minimum Marketable Feature. That MMF provided enough value (and product coherence) for all three personas: inside salespeople and their managers, as well as Acme’s sales team.

The smaller you can make your MMFs, the faster the flow of work.

How Small Are Your Minimums?

I regularly work with teams who think an MVE, an MVP, and an MMF are all the same thing. While it's possible all three are the same, more often they are different.

I find that when teams use one-day spikes, learning together throughout the day, they are better able to differentiate between the various minimums. How small can you make your work and still make it valuable? Let us know in a comment below!