I’ve seen a lot of misconceptions, or myths, about test-driven development over the years.

Why? Well, that’s hard to answer directly but easy to answer indirectly. I’ve spent much of the last half-decade not just practicing TDD but teaching it, through video courses, blog posts, and client engagements aimed specifically at teaching teams the practice. I have a lot of exposure to people who are about to learn TDD, so if anyone were going to hear the myths about it, it would be me.

What’s harder to pin down is where the myths come from and why. My personal hypothesis is that, over the years, TDD has emerged as The Right Thing (TM). So anyone not doing it these days has a strong incentive to do one of two things:

- Come up with a valid reason not to do it (i.e., “why TDD is bad”).

- Hand-wave a bit to manufacture experience on the subject.

Let me be clear about something: this is entirely rational behavior for anyone who feels some pressure, and perhaps even existential doubt, about their job.

But it also muddies the waters. So today, let’s clear away some of the mud by looking at a few myths about test-driven development.

TDD Is a Synonym for Unit Testing

I often ask, “Does anyone have prior experience with TDD?”

“Oh, yes, I’m familiar with it. We wrote ‘JUnits’ and asserts at my last position because the build required minimum test coverage.”

I’ve lost track of how many times I’ve had this very exchange, modulo the particular test framework, language, and code coverage tool. The message is the same, though. “Yes, I wrote some unit tests once.”

Writing unit tests does not mean that you’ve practiced TDD, any more than owning a wrench means you’ve changed your car’s oil. TDD isn’t about writing unit tests. It’s an approach to writing production code that happens to produce unit tests. And it has a very specific cycle of steps that you follow.

Describing those steps in depth would take us beyond the scope of this post, but suffice it to say that the cycle involves writing unit tests, writing production code, and refactoring production code in a specific, carefully orchestrated sequence. Slapping a few unit tests into your codebase right before committing doesn’t qualify.
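Here is a minimal sketch of one pass through that cycle. It uses Python and the standard library's `unittest` module, since the post names no language of its own, and the `total()` function is a hypothetical example. The comments mark the order in which each piece was written. The code itself shows the state after the refactoring step.

```python
import unittest


# Step 1 (red): write the test first. Before total() exists, running it
# reports a NameError, which is the failure you expect to see at this point.
class TotalTest(unittest.TestCase):
    def test_total_is_sum_of_prices(self):
        self.assertEqual(total([3, 4]), 7)


# Step 2 (green): write just enough production code to make the test pass.
# A first pass might have been a hand-rolled loop:
#     result = 0
#     for price in prices:
#         result += price
#     return result
#
# Step 3 (refactor): with the test green, simplify the code, then rerun the
# test to confirm the behavior has not changed.
def total(prices):
    """Return the sum of a list of prices (hypothetical example)."""
    return sum(prices)
```

Save it as, say, `test_total.py` and run `python -m unittest test_total.py`. The run should report one passing test. Writing the same test after the fact would leave an identical file behind. What makes it TDD is the order in which the pieces were written.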

With TDD, You Write All Tests Before You Write Production Code

Remember how I just said that there’s a specific sequence of activities for test-driven development? Well, guess what that sequence doesn’t ask you to do. It doesn’t ask you to write every test you might conceivably need and only then start on your production code, in some kind of waterfall-esque approach within the implementation phase.

People frequently object to the idea of TDD on the grounds that writing all of your tests first is silly and wasteful. And they’re completely right. That would be silly and wasteful. Luckily, TDD doesn’t ask you to do that.

Test-driven development involves writing a single failing test and then adding just the code necessary to make that test pass. You add tests to your codebase the same way you add production code: incrementally and practically.
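The sketch below shows tests accumulating this way. It uses Python's `unittest`, and the `is_leap_year()` function and the order of its tests are illustrative assumptions. Each test was added only after the previous one passed. Each one failed against the code as it stood until exactly one new rule was added.

```python
import unittest


def is_leap_year(year):
    if year % 400 == 0:   # added to pass test 3
        return True
    if year % 100 == 0:   # added to pass test 2
        return False
    return year % 4 == 0  # written to pass test 1


class LeapYearTest(unittest.TestCase):
    # Test 1: written first; the divisible-by-4 rule is the code that passed it.
    def test_year_divisible_by_4_is_leap(self):
        self.assertTrue(is_leap_year(2024))

    # Test 2: fails against the divisible-by-4 rule alone, since 1900 % 4 == 0.
    def test_century_is_not_leap(self):
        self.assertFalse(is_leap_year(1900))

    # Test 3: fails against the first two rules alone, since 2000 % 100 == 0.
    def test_year_divisible_by_400_is_leap(self):
        self.assertTrue(is_leap_year(2000))
```

Nobody wrote all three tests up front. The 400-year rule appeared only when a failing test demanded it.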

TDD Practitioners Don’t Bother with Design or Architecture

This particular myth differs a bit from the ones I’ve mentioned so far. Those originate purely from people who have never actually tried TDD. This one, on the other hand, gets some help from the occasional person who has.

This often happens with people at a company undergoing an agile transformation. They take the principles of YAGNI, emergent design, and TDD, kind of ball them all together, and treat the bundle as the antidote to their previous world of a gigantic, seemingly endless “design phase.”

“Awesome! TDD means we don’t have to do that anymore! We can finally just start writing code and not worry about anything else.” Detractors of the practice then seize on this sentiment as some kind of failure inherent to TDD.

It’s not. That’s a myth. TDD is, again, a sequence by which you write code when you’re ready to start writing code. It in no way prohibits thinking through your eventual design, looking out for pitfalls, or whiteboarding architecture. You should absolutely do these things, TDD or no TDD.

You Should Do TDD to Get Your Test Coverage Up

Frankly, I could write an entire post about test coverage and why it’s a metric that nobody outside the dev team should look at. But this isn’t that post, so I’ll just briefly say that I find test coverage a frequently problematic metric and most definitely not a first-class goal. Your team’s test coverage should serve only to tip the team off to where it has untested code.

In light of that take, you can understand why I cringe at the idea that test coverage is a first-class goal and that TDD’s purpose is to improve it. No, no, no!

TDD provides an awful lot of wins: a robust regression test suite, the ability to fearlessly refactor your code, the avoidance of writing unnecessary code, and plenty more. Those are first-class benefits. Test coverage is, if anything, just a trailing indicator that those wins have materialized.

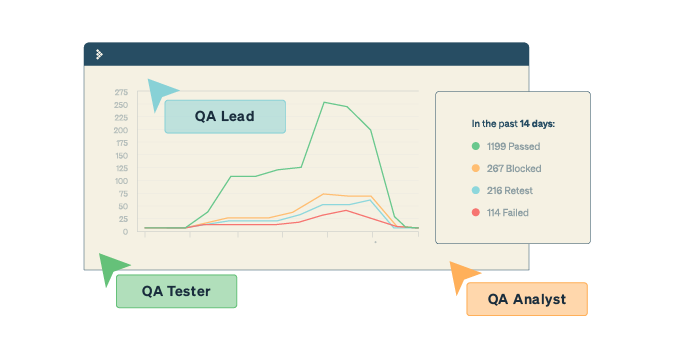

TDD Is a QA Strategy, and It Can Even Replace QA

I’ll close with perhaps the most business-focused myth that I hear, and potentially the most damaging one. If you’re adopting TDD as a way to move QA into the development team or to cut costs by shrinking the QA department, you’re headed down a dangerous path.

The term is “test-driven development.” It’s a software development technique, not a QA approach. Perhaps the most philosophical and fundamental way that I can describe TDD is to say that it involves breaking your code into tiny pieces of functionality and carefully defining “done” before you start each one. You write a test that fails, knowing that when it passes, you’ll be done with the current bit you’re working on.

This means that TDD produces exactly as many tests as it takes to get done, and not one more. So no comprehensive edge-case testing. No smoke tests and no load tests. No exploratory tests. You swap TDD for QA at your extreme peril.
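Here is a hypothetical sketch of that gap. The increment, parsing a quantity typed into an order form, and the `parse_quantity()` function are invented for illustration. The sketch uses Python's `unittest`. The single test defined "done." Once it passed, the TDD cycle for that increment was over.

```python
import unittest


def parse_quantity(text):
    # Just enough code for the test below; int() already ignores
    # surrounding whitespace.
    return int(text)


class ParseQuantityTest(unittest.TestCase):
    # "Done" for this increment: digits with surrounding whitespace
    # become an integer.
    def test_parses_digits_ignoring_whitespace(self):
        self.assertEqual(parse_quantity(" 3 "), 3)


# The suite is green and the increment is done, yet nobody has decided
# whether an order quantity of "-3" or "0" makes sense. Today both are
# accepted (they return -3 and 0). Surfacing questions like that is the job
# of edge-case and exploratory testing, not of the TDD cycle.
```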

This, like all the other myths, involves fundamental misunderstandings about the nature of TDD. TDD is a simple but powerful technique for writing code and one that happens to produce a lot of collateral good. So do yourself a favor and give it a serious try before jumping to conclusions about what it is, what it isn’t, and what it lets you do.