Good performance is a must for any application, whether it runs on your cell phone, on a desktop computer or in the cloud. Thus, before any application can make its way into production, it must be tested to make sure that it meets (or, hopefully, exceeds) the required performance standards.

This is easier said than done because there is no single metric you can use to determine the quality of an application’s performance. Rather, performance testing requires that you measure a variety of metrics that relate to the different aspects of an application’s execution.

Understanding the different aspects of application performance is critical when planning tests to determine an application’s quality. Here, let’s delve into these different aspects of computing performance and how to incorporate them into your test planning.


The Different Aspects of Computing Performance

Computing can be divided into five operational aspects: algorithm, CPU, memory, storage and input/output (I/O). The table below describes how these aspects relate to application performance.

| Aspect | Description | Relevant testing metrics |
|---|---|---|
| Algorithm | The calculations, rules and high-level logic instructions defined in a program to be executed in a computing environment. | Rule assertion pass/fail, UI test pass/fail, database query execution speed, programming statement execution speed |
| CPU | The central processing unit is the circuitry within a computing device that carries out programming instructions. CPUs execute the low-level arithmetic, logic, control and input/output operations specified by those instructions. | Clock speed, MIPS (millions of instructions per second), instruction latency, CPU utilization |
| Memory | The computer hardware that stores information for immediate use in a computing environment, typically referred to as random access memory (RAM). | Memory speed per access step size, memory speed per block size |
| Storage | Computer hardware that stores data for persistent use. Storage can be internal to a computing device or located in a remote facility on a dedicated storage device. | Disk capacity utilization, disk read/write rate |
| I/O | The transfer of data within and among computing devices. Internal I/O is the transfer of data among the CPU, memory and internal disk storage, and to the interfaces to external networks. External I/O is the transfer of data to and from different points on a network. | Network path latency, available peak bandwidth, loss rate, network jitter profile, packet reorder probability |
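To make the I/O row more concrete, the following Python sketch computes several of its metrics (mean latency, jitter, loss rate and packet reorder probability) from a series of probe results. It assumes a hypothetical probe tool that sends numbered probes and records the sequence number and round-trip latency of each probe that comes back, in arrival order. The jitter here is a simplified measure, the mean absolute difference between consecutive latency samples, and not the smoothed estimator defined in RFC 3550.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple


def network_metrics(sent: int, received: List[Tuple[int, float]]) -> Dict[str, Optional[float]]:
    """Summarize probe results for the I/O aspect.

    sent     -- number of probes transmitted, numbered 0..sent-1
    received -- (sequence_number, latency_ms) pairs in arrival order
    """
    latencies = [latency for _, latency in received]

    # Loss rate: fraction of transmitted probes that never came back.
    loss_rate = (sent - len(received)) / sent if sent else 0.0

    # Jitter (simplified): mean absolute change between consecutive latencies.
    deltas = [abs(b - a) for a, b in zip(latencies, latencies[1:])]
    jitter = mean(deltas) if deltas else 0.0

    # A probe counts as reordered if it arrives after a probe with a higher sequence number.
    highest_seen = -1
    reordered = 0
    for seq, _ in received:
        if seq < highest_seen:
            reordered += 1
        else:
            highest_seen = seq
    reorder_probability = reordered / len(received) if received else 0.0

    return {
        "mean_latency_ms": mean(latencies) if latencies else None,
        "jitter_ms": jitter,
        "loss_rate": loss_rate,
        "reorder_probability": reorder_probability,
    }


# Five probes sent; probe 3 was lost and probe 1 arrived after probe 2.
print(network_metrics(5, [(0, 20.0), (2, 24.0), (1, 22.0), (4, 21.0)]))
```

For this sample data the function reports a loss rate of 0.2 (one of five probes lost) and a reorder probability of 0.25 (one of four arrivals out of order).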

Measuring the performance of an application in terms of each of these aspects gives you the depth of insight required to determine if your application is ready for production use. How and when you’ll measure these aspects will vary according to the priorities you set in your test plan.
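As a rough illustration of measuring a few of these aspects on a single machine, the Python sketch below uses only the standard library to time a statement (algorithm), estimate CPU utilization for a workload (CPU) and measure the rate of a write that is flushed to disk (storage). These are simple single-process probes meant to show the idea; the function names and default sizes are assumptions, and real test plans typically rely on dedicated benchmarking and monitoring tools.

```python
import os
import tempfile
import time
import timeit
from typing import Callable


def statement_speed(stmt: str, setup: str = "pass", number: int = 1000) -> float:
    """Algorithm aspect: average seconds per execution of a Python statement."""
    return timeit.timeit(stmt, setup=setup, number=number) / number


def cpu_utilization(workload: Callable[[], object]) -> float:
    """CPU aspect: CPU time used by this process divided by wall-clock time
    while workload() runs. Values near 1.0 suggest a CPU-bound workload on
    one core; multi-threaded workloads can exceed 1.0."""
    wall_start, cpu_start = time.perf_counter(), time.process_time()
    workload()
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start
    return cpu / wall if wall > 0 else 0.0


def disk_write_rate(size_bytes: int = 8 * 1024 * 1024) -> float:
    """Storage aspect: bytes per second for a write that is forced to disk."""
    data = os.urandom(size_bytes)
    with tempfile.NamedTemporaryFile() as f:
        start = time.perf_counter()
        f.write(data)
        f.flush()              # push Python's buffer to the operating system
        os.fsync(f.fileno())   # ask the OS to commit the data to the device
        elapsed = time.perf_counter() - start
    return size_bytes / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    per_call = statement_speed("sorted(xs)", setup="xs = list(range(1000, 0, -1))")
    print(f"sorted() on 1,000 ints: {per_call * 1e6:.1f} microseconds per call")
    print(f"CPU utilization: {cpu_utilization(lambda: sum(i * i for i in range(10**6))):.2f}")
    print(f"Disk write rate: {disk_write_rate() / 1e6:.1f} MB/s")
```

Numbers from a sketch like this are only comparable when they are collected on equivalent hardware, which is the same environment-consistency concern that applies to any performance test.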

Establishing Priorities in a Test Plan

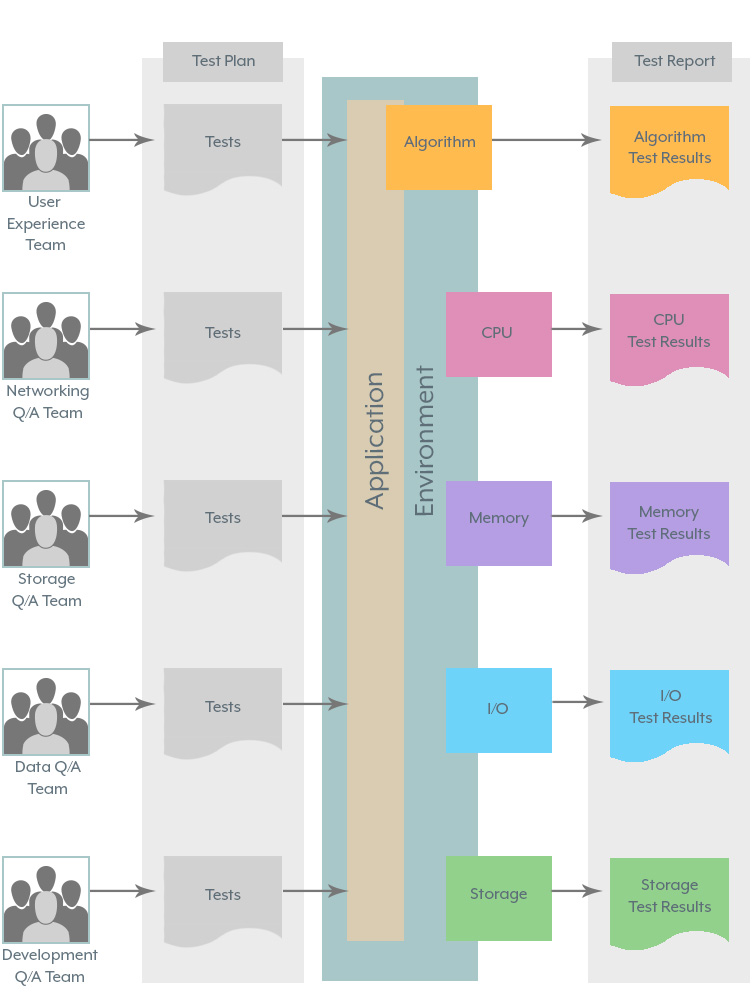

No single testing session can cover all aspects of application performance, and it’s rare for a single team to have the expertise required to test all aspects. Typically, different teams in the quality assurance landscape will focus on a particular aspect of performance testing. The testing activities of each team are organized according to a general test plan, with each part of the test plan defining a particular set of priorities to be examined.

For example, the networking QA team will design and execute networking tests. The customer experience QA team will implement UI functional testing. The data QA team will performance test data access and query execution. And additional teams with expertise in other aspects will participate according to the need at hand.
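One way to keep this division of labor explicit is to record which aspects each team owns and then check the plan for gaps. The sketch below is hypothetical: the team names follow the example in the paragraph above, and the aspect labels follow the table of the five aspects. With only the three example teams, the check reports that CPU and memory are not covered, which is where additional teams would need to participate.

```python
from typing import Dict, Set

# The five aspects of computing performance.
ASPECTS: Set[str] = {"algorithm", "cpu", "memory", "storage", "io"}

# Hypothetical assignment of aspects to the example QA teams.
TEAM_ASSIGNMENTS: Dict[str, Set[str]] = {
    "networking QA": {"io"},
    "customer experience QA": {"algorithm"},   # UI functional tests
    "data QA": {"storage", "algorithm"},       # data access and query execution speed
}


def uncovered_aspects(assignments: Dict[str, Set[str]]) -> Set[str]:
    """Return the aspects that no team in the plan is responsible for."""
    covered = set().union(*assignments.values())
    return ASPECTS - covered


print(sorted(uncovered_aspects(TEAM_ASSIGNMENTS)))
```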

The important thing to understand is that acceptable performance is determined in terms of all aspects of your application’s operation, and different teams will have the expertise and test methodologies required to make the determination about a given aspect of performance quality. Therefore, when creating a test plan, you will do well to distribute work according to a team’s expertise. The result of the testing done by all the teams gets aggregated into a final test report, which influences the decision to release the software to production (see figure 2 below).
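The aggregation step can be sketched as a function that rolls per-team results up into a single release recommendation. The `AspectResult` record, the pass/fail counts and the rule that any failure or any untested aspect blocks the release are illustrative assumptions rather than a prescribed format; a real report would also carry the measured metrics and the thresholds they were judged against.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List

REQUIRED_ASPECTS = {"algorithm", "cpu", "memory", "storage", "io"}


@dataclass
class AspectResult:
    team: str
    aspect: str
    passed: int
    failed: int


def final_report(results: Iterable[AspectResult], required=REQUIRED_ASPECTS) -> Dict[str, object]:
    """Aggregate per-team results into a single release recommendation."""
    by_aspect: Dict[str, List[AspectResult]] = {}
    for result in results:
        by_aspect.setdefault(result.aspect, []).append(result)

    missing = sorted(set(required) - set(by_aspect))
    failing = sorted(a for a, rs in by_aspect.items() if any(r.failed > 0 for r in rs))
    return {
        "missing_aspects": missing,
        "failing_aspects": failing,
        # Recommend release only if every aspect was tested and nothing failed.
        "release_recommended": not missing and not failing,
    }


report = final_report([
    AspectResult("networking QA", "io", passed=40, failed=0),
    AspectResult("customer experience QA", "algorithm", passed=118, failed=2),
    AspectResult("data QA", "storage", passed=30, failed=0),
])
print(report)
```

In this made-up run the report flags CPU and memory as untested and the algorithm aspect as failing, so release is not recommended.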

Many teams are required in order to test the performance quality of an application

The Importance of Environment Consistency

As mentioned above, comprehensive testing requires that all aspects of application performance be tested, measured and evaluated. Of equal importance is that the environment in which the application is tested be identical to the production environment into which the application will be deployed.

The code in the testing environment will probably be the same code that is deployed to production, but its behavior can still differ between the two environments because of different configuration settings and environment variable values.
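Because configuration is where identical code most often diverges between environments, it can help to diff the two configurations before trusting any performance numbers. The sketch below compares two dictionaries of settings, such as environment variables exported from each environment. The setting names are hypothetical, and the `ignore` set holds values that are expected to differ, such as hostnames.

```python
from typing import Dict, Iterable, Tuple

UNSET = "<unset>"


def config_drift(test_env: Dict[str, str], prod_env: Dict[str, str],
                 ignore: Iterable[str] = ()) -> Dict[str, Tuple[str, str]]:
    """Return {setting: (test_value, prod_value)} for every setting that differs."""
    keys = (set(test_env) | set(prod_env)) - set(ignore)
    drift = {}
    for key in sorted(keys):
        test_value = test_env.get(key, UNSET)
        prod_value = prod_env.get(key, UNSET)
        if test_value != prod_value:
            drift[key] = (test_value, prod_value)
    return drift


# Hypothetical settings captured from each environment.
test_settings = {"DB_HOST": "db.test.internal", "DB_POOL_SIZE": "10", "CACHE_TTL_SECONDS": "60"}
prod_settings = {"DB_HOST": "db.prod.internal", "DB_POOL_SIZE": "50", "CACHE_TTL_SECONDS": "60",
                 "CDN_ENABLED": "true"}

print(config_drift(test_settings, prod_settings, ignore={"DB_HOST"}))
```

Here the hostnames are expected to differ, but the smaller connection pool and the missing CDN setting in the test environment could skew any timing results collected there.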

Tests that depend on the physical environment are another matter. Fully emulating the production environment in a testing scenario can be an expensive undertaking, so whether full emulation is required depends on the aspect being tested. When testing the algorithm aspect, in terms of the pass/fail of a particular rule or UI response, the speed of execution does not really matter. For example, a test that verifies that login and authentication work correctly for the credentials submitted will pass or fail the same way in any environment. However, when testing that login completes within the time advertised in the application’s service level agreement, consistency between the testing and production environments becomes critical. It’s a matter of comparing apples to apples.
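The difference between the two kinds of login test can be sketched in Python. `login` is a hypothetical stand-in for the system under test, and the 2-second, 95th-percentile threshold is an assumed SLA figure. The functional test gives the same answer in any environment; the timing test is only meaningful when it runs in an environment equivalent to production.

```python
import statistics
import time

SLA_LOGIN_SECONDS = 2.0   # hypothetical SLA: 95% of logins complete within 2 seconds
RUNS = 20


def login(username: str, password: str) -> bool:
    """Hypothetical stand-in for the application's login call."""
    time.sleep(0.01)  # simulate the round trip to an authentication service
    return username == "alice" and password == "s3cret"


def test_login_functional() -> None:
    # Algorithm aspect: only the outcome matters, not how long it took.
    assert login("alice", "s3cret")
    assert not login("alice", "wrong-password")


def test_login_meets_sla() -> None:
    # Timing aspect: sample several logins and check the 95th percentile.
    samples = []
    for _ in range(RUNS):
        start = time.perf_counter()
        ok = login("alice", "s3cret")
        samples.append(time.perf_counter() - start)
        assert ok
    p95 = statistics.quantiles(samples, n=20)[-1]  # last of 19 cut points = 95th percentile
    assert p95 <= SLA_LOGIN_SECONDS, f"p95 login time {p95:.3f}s exceeds the SLA"


if __name__ == "__main__":
    test_login_functional()
    test_login_meets_sla()
    print("both login tests passed")
```

Checking a percentile over several samples, rather than a single timing, is one common way to keep a timing test from failing on an isolated slow run; whether your SLA is stated as a percentile is an assumption to confirm.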

One of the benefits of automation, virtualization and cloud computing is that production-level test environments can be created on an as-needed basis, in an ephemeral manner. In other words, if your production environment is an industrial-strength AWS m5.24xlarge instance (96 vCPUs, 384 GiB memory, 25 Gbps network bandwidth), you can create an identical environment for testing and keep it running only for the setup and duration of the test. Once the test is over, you destroy the environment, thus limiting your operational costs. Ephemeral provisioning not only provides the environmental consistency required to conduct accurate testing across all five aspects of performance, but also allows you to test in a cost-effective manner.
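The create, test and destroy lifecycle maps naturally onto a Python context manager, which guarantees that teardown runs even when a test fails. In the sketch below, `provision` and `destroy` are hypothetical callables supplied by the caller; in practice they would wrap whatever infrastructure-as-code tool or cloud SDK your team uses, and the fake versions shown here only record what happened.

```python
from contextlib import contextmanager
from typing import Any, Callable, Dict, Iterator, List, Tuple

Env = Dict[str, Any]


@contextmanager
def ephemeral_environment(provision: Callable[[Env], Env],
                          destroy: Callable[[Env], None],
                          spec: Env) -> Iterator[Env]:
    """Create an environment from spec, yield it for testing, then always destroy it."""
    env = provision(spec)
    try:
        yield env
    finally:
        destroy(env)  # runs whether the test body succeeded or raised


# Fake provisioning functions that log events instead of calling a cloud API.
events: List[Tuple[str, str]] = []


def fake_provision(spec: Env) -> Env:
    events.append(("provision", spec["instance_type"]))
    return {"id": "env-123", **spec}


def fake_destroy(env: Env) -> None:
    events.append(("destroy", env["id"]))


with ephemeral_environment(fake_provision, fake_destroy, {"instance_type": "m5.24xlarge"}) as env:
    events.append(("run performance tests", env["id"]))

print(events)
```

Putting teardown in a `finally` clause is the design choice that matters here: a failed or interrupted test run still releases the environment, so costs stay limited to the duration of the test.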


Conclusion

No single test provides an accurate description of an application’s performance, any more than a single touch of an elephant describes the entire animal. A comprehensive test plan will measure system performance in terms of all five aspects. Conducting a thorough examination of an application by evaluating the metrics associated with the five aspects of application performance is critical for ensuring that your code is ready to meet the demands of the users it’s intended to serve.

Article by Bob Reselman, a nationally known software developer, system architect, industry analyst and technical writer/journalist. Bob has written many books on computer programming and dozens of articles about topics related to software development technologies and techniques, as well as the culture of software development. Bob is a former Principal Consultant for Cap Gemini and Platform Architect for the computer manufacturer Gateway. In addition to his software development and testing activities, Bob is in the process of writing a book about the impact of automation on human employment. He lives in Los Angeles and can be reached on LinkedIn at www.linkedin.com/in/bobreselman.
