It’s a fact of life in quality assurance circles: Any line of code that hasn’t been exercised in a test is a potential risk. Thus, test practitioners use a metric called the code coverage percentage to determine how much of the code that’s been written has actually been exercised in testing. Many companies that support automated testing incorporate some degree of code coverage reporting into their test evaluation processes, and most of the popular programming frameworks have code coverage tools that integrate into the testing process.

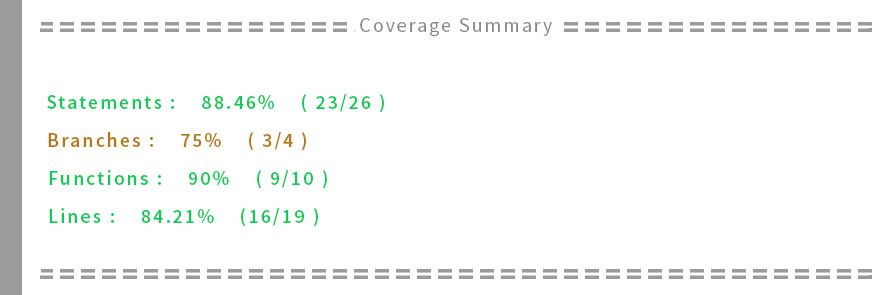

Most companies that support code coverage also require developers to write unit tests that cover code to a predefined minimum standard. The percentage varies by company: some require 80 percent coverage, others 90 percent, and still others more or less. But there is a problem. Simply mandating a coverage percentage that unit tests must achieve does not automatically translate into less risky code. It's entirely possible to have tests that produce a high code coverage percentage while the underlying code has serious flaws. For example, take a look at the code coverage report below in Figure 1.


Figure 1: A code coverage report with acceptable percentages

The coverage report was created using Istanbul, a code coverage reporting tool that integrates with Node.js. At first glance, the report seems perfectly acceptable. There are no failing tests; more than 88 percent of the statements have been exercised; 75 percent of the logical branches (for example, the true and false paths through “if” statements) have been taken; nine of the 10 functions in the code have been exercised; and 84.21 percent of the lines of code have been reached and run.

What's not to like? If a company's policy is that unit tests must cover at least 80 percent of the statements or lines, this code is good to go.
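Whether this report "passes" depends on which metrics an 80 percent rule is applied to. The following is a minimal sketch of such a gate, assuming the four percentages described for Figure 1; `checkCoverage` and `figure1` are hypothetical names, and because the statements figure is given only as "more than 88 percent," 88 is used as a lower bound.

```javascript
// Hypothetical coverage gate: compares each selected metric to a minimum.
// Values are the Figure 1 percentages as described in the text
// (statements is a lower bound, since the exact value isn't given).
function checkCoverage(summary, thresholds) {
  const failures = [];
  for (const [metric, minimum] of Object.entries(thresholds)) {
    if (summary[metric] < minimum) {
      failures.push(`${metric}: ${summary[metric]}% is below ${minimum}%`);
    }
  }
  return { passed: failures.length === 0, failures };
}

const figure1 = { statements: 88, branches: 75, functions: 90, lines: 84.21 };

// An 80 percent rule applied to lines alone lets the code through.
const linesOnly = checkCoverage(figure1, { lines: 80 });

// The same rule applied to every metric flags only the branch number.
const allMetrics = checkCoverage(figure1, {
  statements: 80,
  branches: 80,
  functions: 80,
  lines: 80,
});

console.log(linesOnly.passed); // true
console.log(allMetrics.passed); // false
console.log(allMetrics.failures);
```

Note that neither check can tell which function went unexercised; a percentage gate only ever sees the totals.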

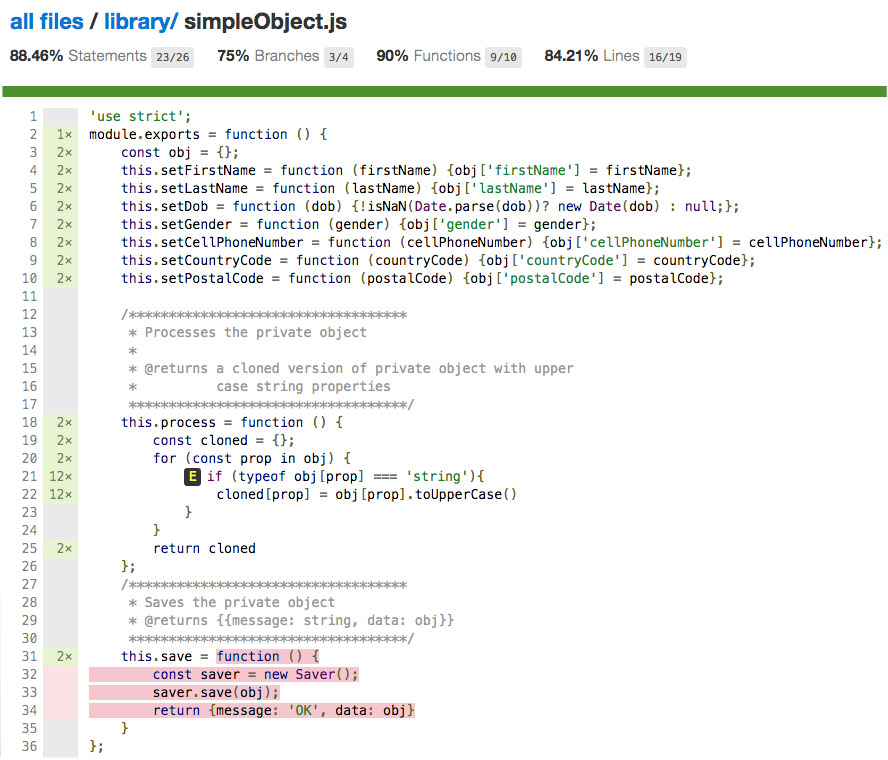

Now, take a look at the reality, as shown below in the code coverage detail in Figure 2.

Figure 2: A coverage report indicating unexercised code

Notice that the function save() at line 31 has not been exercised, as indicated by the red highlight. And were it to be exercised, it would fail, because the code tries to create an object from a nonexistent class, Saver. Also, think about this: How important is save() to the proper execution of this code? I'd say very important, certainly more important than the roughly 15 percent risk factor one might infer from the coverage report. (The logic is that if 85 percent of the code is covered, there is a 15 percent risk of system failure due to noncoverage.)
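No coverage percentage reveals this defect, but a single test that calls save() would. Here is a minimal sketch, assuming code shaped like the module in Figure 2; only `save()` and `Saver` come from the figure, while `record`, `write` and `testSave` are hypothetical names added for illustration.

```javascript
// Hypothetical stand-in for the Figure 2 code: save() builds an object
// from a class named Saver that is never declared anywhere.
function save(record) {
  const saver = new Saver(); // throws ReferenceError: Saver is not defined
  return saver.write(record);
}

// One unit test that simply calls save() is enough to expose the defect.
function testSave() {
  try {
    save({ id: 1 });
    return "pass";
  } catch (err) {
    return `fail: ${err.name}`;
  }
}

console.log(testSave()); // fail: ReferenceError
```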

If this code — which, again, passes a standard of 80 percent coverage — were to go into production, I’d wager that the number of complaints sent in from end users would be much greater than 15 percent of all the complaints received. In other words, 15 percent of the lines of code may very well cause 100 percent of the problems.

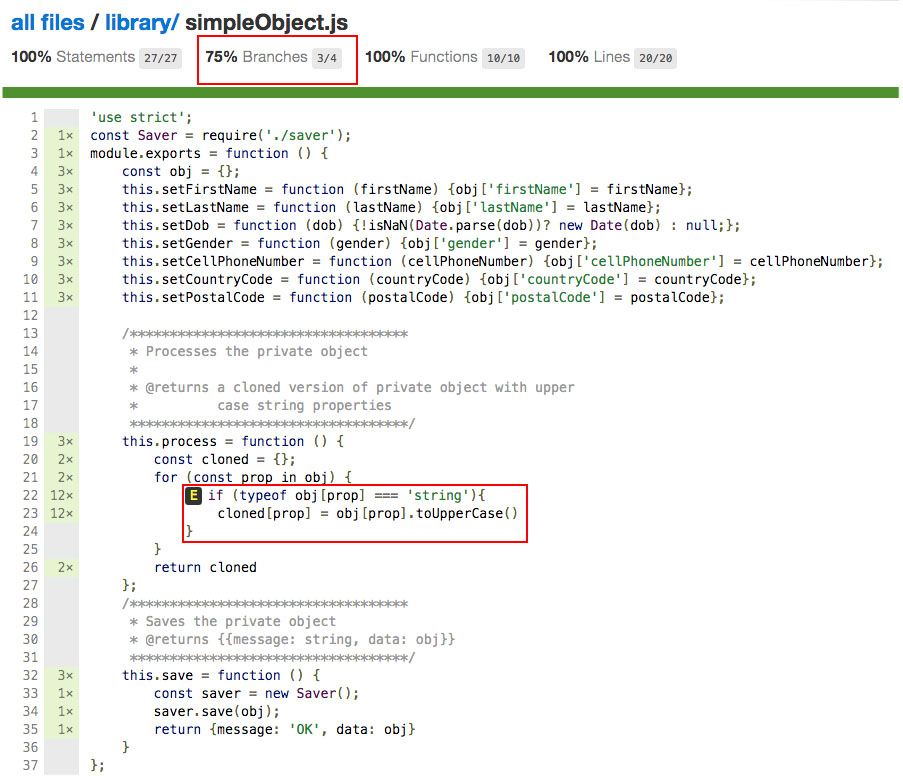

So, what’s to be done? Should the company require that all unit testing cover 100 percent of the code? If so, let’s look at another coverage report, shown below in Figure 3.

Figure 3: A unit test that produces 100 percent coverage of statements, functions and lines, yet falls short on branch coverage

Figure 3 is a nearly perfect coverage report. All the unit tests run against the code pass, and the result is 100 percent coverage of statements, functions and lines. But the branch coverage, outlined at the top in red, is only 75 percent. Why? The tool has noticed that the if statement at line 22 has no else clause, and it counts that missing else as a branch the tests never took.

Of course there is no else clause! The application logic is such that the if statement checks only whether the type of a value is a string. That's all it's supposed to do. Yet the tool counts the implicit, empty else path as a branch and reports that not all branches have been covered. Effectively, this code cannot reach 100 percent branch coverage unless someone writes a test whose only purpose is to drive a path that does nothing. If a 100 percent coverage requirement were in place and strictly enforced on all aspects of the code, this code would be held back from release, despite the fact that it's healthy.
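The bookkeeping behind that branch number can be sketched by hand. This is a simplified model, not Istanbul's actual instrumentation: it assumes one counter for the "condition true" path of the if statement and one for the implicit "condition false" path. The function `recordIfString` and the counter names are hypothetical.

```javascript
// Hand-rolled counters that roughly mimic how a coverage tool records an
// `if` with no else clause: the true path and the implicit false path.
const branchHits = { taken: 0, implicitElse: 0 };

// Hypothetical function shaped like the string check in Figure 3.
function recordIfString(value, seen) {
  if (typeof value === "string") {
    branchHits.taken += 1;
    seen.push(value);
  } else {
    // The real code has no else clause; this block exists only to count
    // the implicit path that the tool tracks anyway.
    branchHits.implicitElse += 1;
  }
}

function branchCoveragePct() {
  const counters = Object.values(branchHits);
  const covered = counters.filter((hits) => hits > 0).length;
  return (covered / counters.length) * 100;
}

const seen = [];
recordIfString("hello", seen);
console.log(branchCoveragePct()); // 50: only the "true" path has run

// A test whose only job is to pass a non-string drives the do-nothing path.
recordIfString(42, seen);
console.log(branchCoveragePct()); // 100
```

Istanbul also recognizes an `/* istanbul ignore else */` comment that excludes such a path from the count; whether a team accepts that escape hatch is itself a policy decision.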

Thus, in this case, and in most others, requiring 100 percent code coverage for release is not realistic. Yet some sort of standard is still needed; otherwise, any code can be released.


What’s to be done?

The solution is a matter of policy. Simply issuing edicts defining coverage percentage is not enough. Code coverage requirements need to be defined in terms of the context of a testing policy. A testing policy also needs to be defined according to the requirements and expectations for the code being developed.

Defining coverage policy requires a team effort. No single person can do an adequate job of defining coverage policy. It’s akin to the parable of the blind men and the elephant. One view of the situation produces an inaccurate description, so many views are needed.

Test policy at the code coverage level should ensure that all functions are exercised with both common and extreme use cases, as well as with happy-path and unhappy-path tests. Branch coverage targets need to be set in terms of the conditions the code is actually expected to handle. The actual percentage of coverage to achieve will vary according to policy; some metrics will warrant 100 percent coverage, while others, such as branch coverage, may require less.
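As one concrete way to write such a policy down, here is a sketch assuming the team runs its tests with Jest, which by default collects coverage through Istanbul. `collectCoverage` and `coverageThreshold` are real Jest options; the percentages and the `./src/persistence/` path are hypothetical placeholders that a team would replace with values it agrees on (Jest reports an error if a listed path does not exist).

```javascript
// jest.config.js: a sketch of a coverage policy expressed as Jest thresholds.
// The percentages and the ./src/persistence/ path are hypothetical.
module.exports = {
  collectCoverage: true,
  coverageThreshold: {
    // Project-wide floor: every function must run at least once,
    // while branch coverage is allowed to sit lower.
    global: {
      functions: 100,
      statements: 90,
      lines: 90,
      branches: 75,
    },
    // A stricter rule for code whose failure would be most costly,
    // such as a persistence layer containing a function like save().
    "./src/persistence/": {
      branches: 100,
      functions: 100,
    },
  },
};
```

With this in place, Jest fails the test run whenever any listed metric falls below its floor, so the policy is enforced by the build rather than by memory.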

What's important to remember is that code coverage should aim not at the minimum standard needed to ensure quality code, but at the best standard. That standard is best determined by everyone responsible for getting the code through the software development lifecycle and into the hands of the public it's intended to benefit.

Code coverage is an indispensable metric for determining code quality. However, standards must be set judiciously. Otherwise, the expense of testing can outweigh the benefits that result. It's senseless to invest time trying to meet standards that are unachievable. It's equally senseless to set standards that do not provide the degree of inspection required to produce quality code. Determining an appropriate code coverage policy can be challenging, but it's a challenge that can be met when addressed collectively and cooperatively by all those involved in the development process, from developer to release personnel.

Article by Bob Reselman, a nationally known software developer, system architect, industry analyst and technical writer/journalist. Bob has written many books on computer programming and dozens of articles about software development technologies and techniques, as well as the culture of software development. Bob is a former Principal Consultant for Cap Gemini and Platform Architect for the computer manufacturer Gateway. In addition to his software development and testing activities, Bob is in the process of writing a book about the impact of automation on human employment. He lives in Los Angeles and can be reached on LinkedIn at www.linkedin.com/in/bobreselman.
