This is a guest post by Jim Holmes.

Test automation, especially at the User Interface (UI) or end-to-end level, can be a huge hit to a team’s productivity, effectiveness, and morale. Bad test suites require an extraordinary amount of care and maintenance, and many organizations end up shutting off or ignoring entire swaths of their suites.

Bad test suites can destroy an organization’s trust in the team’s ability to deliver quality software. That may sound hyperbolic, but it’s not. Organizational leaders see lots of time spent writing tests without any clear benefit. Worse, they see continual test failures and large amounts of time invested in maintaining brittle test suites, with no discernible benefit to the systems or products the team is shipping.

It doesn’t have to be this way!

A few simple concepts, applied with careful thought and discipline, can reap tremendous benefits for teams working with test automation. This article focuses on UI test automation; however, several of the ideas apply to many types of automated testing.


1. Avoid Poorly Written Code

Test code IS production code. Let’s repeat that: Test code IS production code.

Test automation code needs to be focused on value, clarity, and maintainability: the same things great teams work hard at in their code for web services, data access, security, etc. Mature delivery teams have learned hard lessons about the long-term costs of taking shortcuts and writing sloppy code. Mature software organizations understand the US Navy SEALs’ mantra: “Slow is smooth. Smooth is fast.”

Your test automation suites need to be treated with the same care you’re (hopefully!) using for your system code. This means using the same principles and practices that make for good system code. Unfortunately, too often UI test suites are written by testers who are new to coding and are missing some critical fundamentals.

One of the best approaches to get teams writing high-value, stable test suites is to pair up testers and developers. Writing test automation together has a number of concrete, measurable benefits, including:

- Simpler code. Complexity kills. Less-experienced testers generally don’t understand this and tend to write overly complex tests that require lots of maintenance.

- Less duplication. Duplication is expensive. Bad code replicated through a codebase is a poison. The Don’t Repeat Yourself (DRY) principle has been one of the most talked about practices in software engineering for decades. Good software developers have deeply internalized this idea and practice it instinctively.

- Clarity of intent. Confusing code is unmaintainable. How can you fix, improve, or extend something if you can’t figure out what it does? Good devs can teach testers how to better name, organize, and write understandable code.

Dev-tester pairing has the additional benefit of helping devs improve their own testing skills!

2. Improve Your Locator Strategy

Locators are perhaps the single most important aspect of how UI test tools work. Locators specify how the test tool finds items on a screen, page, view, window, etc. Locators enable the test tool to find and click buttons, input text into fields on a form, extract values from a report, and so on.

The exact mechanics of how locators work vary both by the test tool (Ranorex Studio or their in-beta web testing tool WebTestIt, for example) and by the application’s display technology (HTML, Silverlight, Windows Forms, Oracle, SAP, etc.). Moreover, the entire application design and technical stack can contribute to the challenges of building solid locators.

(Sidebar: “Locators” are referred to several different ways depending on the application’s user interface technology, the tool you’re using for automation, and the team you’re working with. You may also hear locators referred to as object identifiers, element identifiers, find logic, accessibility identifiers, and other terms. Please don’t get hung up on the exact terminology in this article—focus instead on the more critical foundational concepts.)

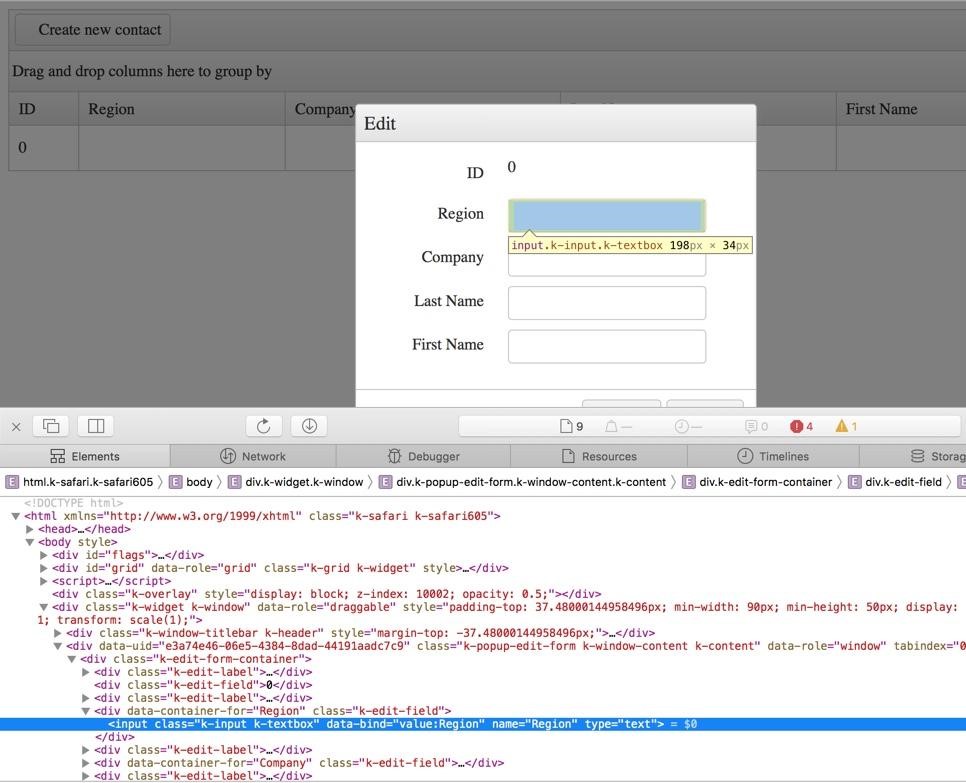

Imagine you’re writing a test for a contact management system. You need to automate a check around creating a new contact, using a screen akin to the one shown below:

The test script needs to do these steps:

- Load the page

- Click the “Create new contact” button

- Input text in various fields of the resulting popup dialog

- Click the button to submit that dialog

- Find the new record on the grid and verify its correctness

Locators come into play in every step after the initial page load. Getting this right is critical for a stable, valuable test!

Locators are generally based on attributes or metadata on elements and objects in the UI. HTML gives access to attributes such as ID and Name; several of these can be seen in the image above. iOS applications offer up accessibility identifiers. Windows Forms and XAML applications (Silverlight, WPF) have their own metadata that can be used for locators.

Good locators have the following aspects:

- Generally they’re not tied to an element’s position in the page or view’s hierarchy. In other words, moving a text box to a different place on the screen should not break your locator. XPath is the prime offender here. While XPath can be a great tool, too often locators using XPath are fixed to multiple specific positions of elements in a page’s hierarchy. Prefer locators based on ID, Name, or similar attributes/properties.

- Locators should not rely on dynamically created attributes or properties. Some frameworks and UI components are notorious for dynamically generating IDs or other common attributes. In these situations, prefer attributes you can control or modify. Often developers can alter the behavior of UI technologies and stacks to make locators more stable, including basing them on customized metadata specific to the particular element, such as a part number or order ID in a grid row.
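As an illustration of the second point, suppose developers agree to add a hypothetical `data-order-id` attribute to every grid row. The minimal C# sketch below (the `GridLocators` class, its method, and the attribute name are all assumptions made for this example) builds a CSS selector from that stable, business-meaningful value rather than from a generated ID or a positional XPath such as `/html/body/div[2]/table/tbody/tr[3]`.

```csharp
using System;

// Hypothetical helper: builds row locators from a stable, developer-controlled
// attribute instead of a generated id or a position in the page hierarchy.
public static class GridLocators
{
    // Assumed attribute name; a real team would agree on its own convention.
    public const string RowKeyAttribute = "data-order-id";

    public static string RowByOrderId(string orderId)
    {
        if (string.IsNullOrEmpty(orderId))
            throw new ArgumentException("Order ID must not be empty.", nameof(orderId));

        // Escape backslashes and single quotes so the value is a valid CSS string.
        string escaped = orderId.Replace("\\", "\\\\").Replace("'", "\\'");
        return $"tr[{RowKeyAttribute}='{escaped}']";
    }
}
```

In a Selenium test this might be used as `browser.FindElement(By.CssSelector(GridLocators.RowByOrderId("A-1042")))`. Because the selector depends only on the order ID, sorting the rows or wrapping the grid in another container does not break it.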

Learning how to best leverage your system’s UI and associated display frameworks to create solid locators is a tremendous step in creating high-value, low-maintenance UI tests.


3. Use Page Object Patterns

Right on the heels of understanding good locator strategies comes a related critical concept: making use of the Page Object Pattern (POP). Page Objects are born of the DRY (Don’t Repeat Yourself) principle mentioned earlier. Badly written UI test suites scatter locators across multiple test files, causing extreme grief when the page under test changes in any fashion.

The idea of Page Objects is to encapsulate locators and behaviors related to the page in one specific class. This ensures that when your UI changes (not if!), you’ll generally only have to go to one place to update your tests.

Moreover, good Page Objects abstract, or hide, tricky page or view behavior so that test scripts focus on the larger use case instead of knowing how to deal with specifics of the display technology. A simple Page Object for the contact grid shown earlier follows.

```csharp
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Support.UI;
// Note: in Selenium 4, ExpectedConditions moved out of OpenQA.Selenium.Support.UI
// into the DotNetSeleniumExtras.WaitHelpers package (namespace SeleniumExtras.WaitHelpers).

// Page Object for the contact grid: all locators and page behavior live here,
// so tests never hard-code IDs or CSS selectors themselves.
public class ContactGridPageObject
{
    public const string GRID_ID = "grid";
    public const string CREATE_BTN_ID = "create_btn";

    public IWebElement CreateButton;

    private readonly IWebDriver browser;

    public ContactGridPageObject(IWebDriver browser)
    {
        this.browser = browser;

        // Wait (up to 30 seconds) for the page to be ready before using it.
        WebDriverWait wait =
            new WebDriverWait(browser, TimeSpan.FromSeconds(30));
        wait.Until(ExpectedConditions.ElementExists(
            By.Id(CREATE_BTN_ID)));

        CreateButton = browser.FindElement(
            By.Id(CREATE_BTN_ID));
    }

    public IWebElement GetContactGrid()
    {
        return browser.FindElement(By.Id(GRID_ID));
    }

    public IWebElement GetCreateButton()
    {
        return browser.FindElement(By.Id(CREATE_BTN_ID));
    }

    // Opens the "Create new contact" dialog and returns its own Page Object
    // (ContactPopUpPageObject is defined separately and not shown here).
    public ContactPopUpPageObject GetContactPopUp()
    {
        CreateButton.Click();
        ContactPopUpPageObject popup =
            new ContactPopUpPageObject(browser);
        return popup;
    }

    public IWebElement GetGridRowById(string rowId)
    {
        return browser.FindElement(By.Id(rowId));
    }

    /*
     * Grid as currently configured appends contact's
     * LName to the dynamically generated ID,
     * in the form of
     *   48-Holmes
     * Ergo, we can use the CSS selector id$="Holmes" to match.
     */
    public IWebElement GetGridRowByIdSubstringContactName(string contactName)
    {
        string selector = "tr[id$='" + contactName + "']";
        return browser.FindElement(By.CssSelector(selector));
    }
}
```

You don’t need to understand much about the page itself to get an idea of how this Page Object helps create cleaner tests: a test script can simply ask it to click a button on the grid or retrieve a row based on a contact’s name. The test doesn’t need to know the specifics of how to access anything on the page; instead, the test simply treats the page as offering up various services (get the grid, get a contact) and actions (click a button, etc.).

Page Objects offer some of the most significant benefits available to any UI test automation suite.

4. Learn to Handle Asynchronous Actions

Asynchronous actions are those where the system is not blocked or locked while waiting for the action to complete. In these situations a test script may proceed with its actions before the system has completed the overall action. Think of using a menu on a web page to filter various search results. The system may continue to work while a separate process or thread handles updating the search list. As a result, a test script may fail because it’s expecting the list to be immediately filtered, when in reality there’s a short delay.

Async is one of the most troublesome situations for any UI tester to deal with. Even experienced coders can suffer large amounts of frustration when trying to deal with async situations.

Continuing with the contact grid example, clicking the “Create new contact” button shown earlier causes the system to display a pop-up dialog. There’s actually a short delay, measured in milliseconds, between clicking the button and the dialog appearing and being ready for input. This tiny delay is rarely apparent to the human eye, but it wreaks havoc with automation scripts, which don’t pause at all. Instead, they’re likely to fail because they can’t find the pop-up to interact with!

Thankfully, every modern, respectable UI test automation framework or toolset gives you the capability to solve async situations in a stable, reliable fashion. The premise is fairly simple: Wait for the exact condition you need for the next step in your script before actually taking that next step. That may sound flippant, but it’s not!

In this specific case, the idea is to click the “Create new contact” button, then wait for a text field on the dialog to exist and be ready for input. A snippet using Selenium WebDriver’s C# bindings is shown below. WebDriver uses the WebDriverWait class and ExpectedConditions mechanisms to let coders handle specific async situations in a flexible, stable way.

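```csharp
// Assumes `browser` is an initialized IWebDriver and that the
// OpenQA.Selenium and OpenQA.Selenium.Support.UI namespaces are imported.
// TimeSpan.FromSeconds(30) gives a 30-second timeout; new TimeSpan(30000000)
// is measured in ticks and would only be 3 seconds.
WebDriverWait wait = new WebDriverWait(browser, TimeSpan.FromSeconds(30));

// Wait until the Create button can actually be clicked, then click it.
wait.Until(ExpectedConditions.ElementToBeClickable(By.Id("create_btn")));
browser.FindElement(By.Id("create_btn")).Click();

// Wait for the dialog's first field to be ready for input before typing.
wait.Until(ExpectedConditions.ElementToBeClickable(By.Name("Region")));
browser.FindElement(By.Name("Region")).SendKeys("Bellvue");
browser.FindElement(By.Name("Company")).SendKeys("Companions United");
browser.FindElement(By.Name("LName")).SendKeys("Whitehall");
browser.FindElement(By.Name("FName")).SendKeys("Raj");

// Submit the dialog via its update button, located by CSS class.
browser.FindElement(By.ClassName("k-grid-update")).Click();
```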

Note that the script first waits for the Create button to exist before clicking it. The test then waits for the Region field to appear, then fills out the various following fields, and finally submits the dialog.

This pattern of wait-for-what-you-need-before-you-use-it will solve 90% of the async problems most teams run across.

5. Avoid Thread.Sleep

Tip #5 is closely tied to #4 and lies in the same domain: properly handling asynchronous actions. It addresses a problem so common and so damaging that it deserves its own spot on the list: avoid using Thread.Sleep() in any of your tests.

Thread.Sleep(), or its equivalent on whatever platform you’re working on, is an awful solution to intermittent test failures in asynchronous situations. It’s tempting to simply throw in a hard-wired delay and move on; however, those delays can add minutes or even hours to an automation suite’s execution time.

All mature test frameworks have the equivalent of WebDriverWait shown in the previous example. Such wait mechanisms use a polling and timeout check to handle async situations. These mechanisms allow the test tool to pause and wait for the async action to complete, yet still provide a timeout to ensure the test doesn’t get stuck in an endless loop if the async never completes or fails. These waits are roughly equivalent to a while-do loop. Imagine a wait condition looking for a button to be ready to click:

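- While the timeout hasn’t expired, repeat the following:
  - Check whether the button exists and is ready. If it is, stop waiting and continue with the test; if not, pause briefly and check again.
- If the timeout expires before the button is ready, fail the wait with an error.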

With this model the test only waits the minimum amount of time necessary for the async action to complete. There’s no wasted time.
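To make the while-loop model concrete, here is a framework-free C# sketch of a polling wait. `Poll.Until` is a hypothetical helper written for illustration, not WebDriverWait’s actual source; in a Selenium suite you would normally use WebDriverWait itself.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical polling wait illustrating the loop described above.
public static class Poll
{
    // Evaluates `condition` repeatedly until it returns true or `timeout` elapses.
    public static void Until(Func<bool> condition, TimeSpan timeout, TimeSpan pollInterval)
    {
        var clock = Stopwatch.StartNew();
        while (clock.Elapsed < timeout)
        {
            if (condition())
                return;                  // Condition met: stop waiting immediately.
            Thread.Sleep(pollInterval);  // Short pause between checks, not a fixed total delay.
        }

        // One final check in case the condition became true right at the deadline.
        if (condition())
            return;

        throw new TimeoutException($"Condition not met within {timeout.TotalSeconds} seconds.");
    }
}
```

A call such as `Poll.Until(() => dialogIsReady(), TimeSpan.FromSeconds(30), TimeSpan.FromMilliseconds(250))`, where `dialogIsReady` is a placeholder for your own check, returns as soon as the check passes. The short sleep inside the loop only spaces out the checks, so the test waits at most one polling interval longer than the action needs, and never longer than the timeout.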

Conversely, Thread.Sleep(), Pause(), or the equivalent in other language platforms, is a hardwired delay. Often inexperienced testers will use a default time of 30 seconds. That means the test script will always pause for 30 seconds, regardless of when the async completes.

In the example above, that means the test will always stop for 30 seconds waiting for the button to appear and be ready, even if the button took only 5 milliseconds.

Imagine those hardwired delays scattered throughout a large test suite. You can see how quickly they add up to minutes. If those delays are part of often-used actions such as logging on, you can literally be adding hours to your suite’s execution time.
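A quick back-of-the-envelope calculation shows how fast this grows. Assume, purely for illustration, a suite of 500 tests that each log on once, where the logon helper contains a 30-second Thread.Sleep() but the page is actually ready after about 1 second:

$$
\begin{aligned}
\text{Fixed sleep: } & 500 \times 30\ \text{s} = 15{,}000\ \text{s} \approx 4.2\ \text{hours} \\
\text{Polling wait: } & 500 \times 1\ \text{s} = 500\ \text{s} \approx 8.3\ \text{minutes}
\end{aligned}
$$

Under those assumptions, replacing the fixed sleep with a polling wait saves roughly four hours per run.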

Thread.Sleep() and its equivalents are handy troubleshooting techniques when trying to isolate odd timing issues in your scripts; however, they should rarely make it into production test suites. Experienced teams will have only a tiny number, ten or fewer, in a suite of thousands of tests.

Keep Test Suites Clean, Flexible, and Fast

Writing good automated test suites requires the same diligence as writing good system code: careful planning, a focus on value, and some thoughtfully applied skill and practices.

Use these five tips to help keep your test suites running smoothly without the painful maintenance you’d otherwise incur!

Jim is an Executive Consultant at Pillar Technology where he works with organizations trying to improve their software delivery process. He’s also the owner/principal of Guidepost Systems which lets him engage directly with struggling organizations. He has been in various corners of the IT world since joining the US Air Force in 1982. He’s spent time in LAN/WAN and server management roles in addition to many years helping teams and customers deliver great systems. Jim has worked with organizations ranging from startups to Fortune 10 companies to improve their delivery processes and ship better value to their customers. When not at work you might find Jim in the kitchen with a glass of wine, playing Xbox, hiking with his family, or banished to the garage while trying to practice his guitar.
