Serena, the test manager, was concerned. Until a month ago, they had operated in a strict waterfall environment. As much as she’d tried, the testers had never had time to automate many of their tests.

Now, they were moving to agile approaches. A month ago, they’d created cross-functional teams, and the testers now worked alongside the developers. Because the testers tried to keep pace with the developers, their test automation was inconsistent.

She understood why. They’d started far behind, with no automation at all. Now they had a variety of cobbled-together scripts and tools, but no end-to-end system test automation and no smoke tests for the builds.

She’d investigated several test automation tools, and none was quite right as a total solution. She didn’t want to customize a commercial tool. She’d been down that path before, and people had spent more time maintaining the tool than testing.

She decided to ask her colleague Dave, the development manager, whether he’d seen this problem in development. She arranged a one-on-one with him that afternoon, and they met in the kitchen over coffee.

“Dave, how did you get to one development environment for all the pieces of the product?” Serena asked.

Dave laughed. “I didn’t. We still use several development environments: one for the platform, one for the mobile parts of the product, and one for the financials. We can’t use just one development environment, because we develop in several languages and scripts. This product isn’t simple to build or to package.”

Serena leaned back. Maybe she’d misjudged the problem. “How did you decide on what you’re doing now?”

Dave sipped his coffee. “We did a series of experiments. Right now, we’re experimenting with our packaging scripts. We were able to reduce the mobile footprint last week. Did you notice?”

Serena nodded. “I did. Our mobile customers are pretty happy about that—well, the ones we rolled it out to.”

“Yeah,” Dave said. “We don’t roll everything out to everyone at the same time, and that’s part of our experimentation, too.” He paused. “We had a jump on you with this agile stuff. We started to work by hypothesis and experiment a couple of years ago. You never had the chance. You folks were always reacting to what we threw over the wall.”

Serena nodded. “Well, I wish things were different, too, but this is where we are. Tell me about your hypothesis for the mobile part of the product.”

“We hypothesized that some of our customers didn’t want to run the mobile app because it was too big, too slow, and definitely clunky,” he said. “We had a memory leak that the testers had told us about, but we couldn’t find it. We decided to refactor our way through it. We asked ourselves: what was the smallest chunk we could build and learn from?”

Serena nodded. “The scientific method,” she said. “Good.”

“The team brainstormed seven ideas that might cause the memory leak,” Dave said. “For each one, we listed the possible cause and what we would need to build to test for the leak.”

“Why seven?” Serena asked.

Dave laughed. “I wanted three. They wanted ten. We compromised at seven,” he said. “It’s a good thing, too, because it turned out that five of our hypotheses led us to finding and fixing the problem.”

Serena nodded.

“The one thing I insisted on was that we had to do something really small. If the team couldn’t do it in a day, it was too big. If the hypothesis was too big, we wouldn’t be able to collect enough data, and we would learn too late. We had to do something in a day: a short spike. That turned out to be a really good idea.”

“Why?” Serena asked.

“Because that’s how we discovered a cascading memory leak and uncovered some problems nobody had reported yet,” Dave said. “If we’d gone ahead with big things, we would have taken longer. The smaller the hypothesis, the faster it was to build a little something, collect a little data, and learn a little bit. That led us to understanding what we had to do, a little bit at a time.”

Serena nodded.

“You might think of what you’ve done so far in testing as a bunch of larger hypotheses,” Dave said. “How small do you think you can make the next one?”

“Great idea,” Serena said. “Thanks.”

It was time to think small, not large.


Create a Small Hypothesis

Serena liked the idea that the testers hadn’t wasted their time. They’d created large hypotheses; they just hadn’t followed up with the data yet.

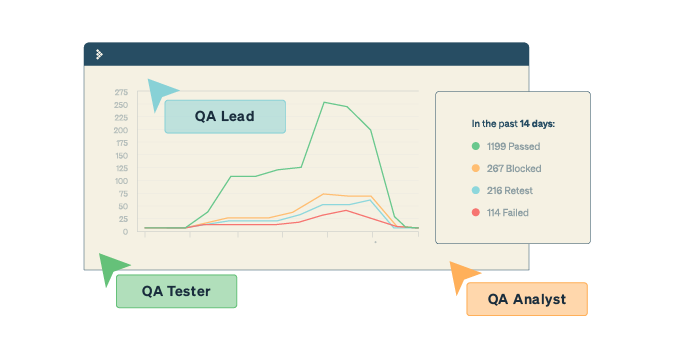

In her weekly testing learning meeting, she asked the testers how they could learn from the automation they’d already done. She proposed these goals:

- Automate the mobile app testing as much as possible, from the API.

- Automate the platform testing, again from the API. This was the best candidate for initial end-to-end testing.

- Automate the financials. They could use golden master testing or other automated approaches. They’d still have to look at the reports every so often, but they could verify the data with automation.
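Golden master testing, mentioned in the financials goal, records the output of a known-good run and compares every later run against it. Here is a minimal Python sketch, assuming the report can be serialized deterministically (sorted keys, amounts in integer cents). `generate_report` and `check_golden_master` are hypothetical names for illustration, not part of any tool the team used.

```python
import json
import tempfile
from pathlib import Path


def generate_report(transactions):
    """Hypothetical stand-in for the financials code under test.

    Sums amounts (integer cents, to avoid float rounding) per account.
    """
    totals = {}
    for account, amount_cents in transactions:
        totals[account] = totals.get(account, 0) + amount_cents
    # Sorted keys keep the output byte-for-byte stable between runs.
    return json.dumps(totals, sort_keys=True, indent=2)


def check_golden_master(actual, golden_path):
    """Compare output against the approved "golden" copy.

    If no golden file exists yet, record this output so a person can
    review and approve it. Returns True if the output matches the golden
    copy (or was just recorded), False if anything changed.
    """
    golden_path = Path(golden_path)
    if not golden_path.exists():
        golden_path.write_text(actual)
        return True
    return golden_path.read_text() == actual


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        golden = Path(tmp) / "report.golden.json"
        txns = [("cash", 10_000), ("receivables", 2_500), ("cash", -3_000)]
        print(check_golden_master(generate_report(txns), golden))  # records
        print(check_golden_master(generate_report(txns), golden))  # compares
```

The first run only records; the golden file is worth a human review before it becomes the reference. This matches the article’s point that someone still looks at the reports every so often while automation checks the data.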

She asked this question:

For your part of the product, what is the smallest test you can automate and learn from?

The testers wrote some notes and estimated the relative size of their smallest tests:

- Mary and Mark tested the mobile app. They thought their smallest test would take them two days.

- Peter and Perry tested the platform. They thought they could develop a test in less than a day.

- Frank and Fanny tested the financials. They thought they would need three days to develop something they could learn from.

Serena asked this question:

How can the developers help you to finish the automation and learn faster?

They all looked at each other. Peter asked, “Isn’t testing our job? Why would we ask developers for help?”

Serena smiled. “Yes, testing is absolutely your job. And who are the pros at developing the application, the people who can insert hooks for your testing?”

Peter nodded. “The devs,” he said. “Sure. Makes sense.”

“Even better,” Serena said, “if you work with the developers, you make this a whole-team problem. Which it is. Your teams can create automation spikes to make the automation part of the regular work.”

Each team member had ideas they could bring back to their product team. Serena took the action item to let Dave and the various Product Owners know that her team wanted to use some team time to create small spikes to experiment.


Follow the Build-Measure-Learn Loop

In their teams, the testers followed the Build-Measure-Learn loop, first articulated by Eric Ries. The loop is:

- Define your hypothesis.

- Build one small chunk to validate that hypothesis.

- Measure the effects of that small chunk to assess and learn from it.

- Use that learning to either create a new hypothesis or make another decision about the product.

Over the next two months, the teams, not just the testers, created enough test automation to start building automated smoke tests for each build. They still didn’t have end-to-end testing, but they could test the builds without going through the UI.
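A build smoke test that skips the UI can be as simple as calling a few API endpoints and checking that each one answers sensibly. The Python sketch below is one way to picture it. The endpoints (`/health`, `/api/accounts`), the checks, and the fake in-process server are all hypothetical, standing in for a real deployed build.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical endpoints and checks; a real build exposes its own.
SMOKE_CHECKS = [
    ("/health", lambda body: body.get("status") == "ok"),
    ("/api/accounts", lambda body: isinstance(body.get("accounts"), list)),
]


def run_smoke_tests(base_url, checks=SMOKE_CHECKS, timeout=5):
    """Call each endpoint and return a list of (path, passed) pairs."""
    # No proxies: smoke tests talk to the build directly.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    results = []
    for path, is_valid in checks:
        try:
            with opener.open(base_url + path, timeout=timeout) as resp:
                body = json.loads(resp.read().decode("utf-8"))
                passed = resp.status == 200 and is_valid(body)
        except (OSError, ValueError):
            # HTTP errors, connection failures, and bad JSON all fail the check.
            passed = False
        results.append((path, passed))
    return results


class FakeBuildHandler(BaseHTTPRequestHandler):
    """Stand-in for a freshly deployed build, for demonstration only."""

    ROUTES = {"/health": {"status": "ok"}, "/api/accounts": {"accounts": []}}

    def do_GET(self):
        payload = self.ROUTES.get(self.path)
        if payload is None:
            self.send_response(404)
            self.end_headers()
            return
        data = json.dumps(payload).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):
        pass  # keep output quiet


def start_fake_build():
    """Serve the fake build on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), FakeBuildHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_fake_build()
    base = f"http://127.0.0.1:{server.server_address[1]}"
    for path, passed in run_smoke_tests(base):
        print("PASS" if passed else "FAIL", path)
    server.shutdown()
    server.server_close()
```

Because each check is small and independent, a team can add one endpoint at a time, in the same spirit as the one-day spikes Dave described.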

As the test base grew, the testers refactored the test designs and tools into just a few tools and consistent tests. They didn’t redesign—they refactored. Every story that touched the “old” testing tools and policies included a little work on the test automation.

Over the next four months, the testers converged on several test automation tools and policies. And, they started to experiment with end-to-end test automation. All along the way, it’s been a team effort, not just a test effort.

Serena hopes the teams will only need another couple of months to test their way to full-enough automation.

This article was written by Johanna Rothman. Johanna, known as the “Pragmatic Manager,” provides frank advice for your tough problems. Her most recent book is “Create Your Successful Agile Project: Collaborate, Measure, Estimate, Deliver.”
